THE ENTITY FRAMEWORK (Part 2)

The Mixed Reality World of Entity AI - Being Human in a world that's increasingly AI

Warning

This post is over 22,000 words (120 pages) long. Reading it will be a significant commitment of your time, but I promise that it will make you think. And it may give you a new idea that helps you navigate this coming world better.

If you want to consume it in four bite-sized chunks, or as a podcast, you can access them here:

You can download and read a PDF version here:

And you can access Part 1 of the series, where we explored what Entity AI is and who is developing it, here.

A Note on Timeline

Every example in this chapter is based on something real - a working product, a live prototype, or a pattern already unfolding.

This isn’t science fiction. It’s just under-integrated.

Most of it will almost certainly happen.

The only questions are who builds it, how aligned it is, and whether we’re ready.

The World You’re About to Enter

Welcome to a world where presence is programmable.

In the Entity AI era, reality is no longer tethered to geography. Your body might be in London, but your awareness? That could be anywhere.

With lighter versions of the Vision Pro, 3D holographic meeting spaces, and next-gen AR glasses, you’ll soon be able to teleport your perception - instantly and immersively.

Apple Vision Pro was just the beginning. Its successors will be slimmer, more social, and more ambient. Microsoft Mesh and Google’s Project Starline are already pushing 3D telepresence into your daily workflow - creating conversations that feel like you’re sharing a room, not a screen.

But presence won’t stop at holograms.

You might inhabit a robot’s body - a dog, a humanoid, even a drone. You could guide a museum tour in Cairo, explore an underwater trench in real time, or sit in on a Tokyo board meeting - all without leaving home.

You won’t fly less because you’re disconnected. You’ll fly less because you’re already there.

Wherever you show up - someone, or something, will meet you there.

This world will be saturated with Entity AIs - yours, and everyone else’s.

Your Entity AI will become your voice, your shield, your interface. It will speak for you, schedule for you, negotiate for you, and learn alongside you.

Other Entity AIs - governments, cities, schools, religions, companies - will be available for conversation at any moment. You won’t search. You’ll ask. You won’t click. You’ll engage.

And how will you know what’s real?

With cryptographic proofs and verified identities embedded into every interaction, you’ll know whether that museum guide is a human, an avatar, or an AI. You’ll know that your lawyer-bot is actually yours - not a cloned imposter.
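The essay doesn't say how these proofs would work. One way to picture it, as a minimal sketch under stated assumptions: every message an agent sends carries a signed claim about who sent it and what kind of entity it is, and the receiver checks the signature before trusting the label. For brevity this uses Python's standard-library HMAC-SHA256 with a shared key, a simplified stand-in for the public-key signatures (such as Ed25519) a real identity system would need so that anyone can verify a claim without holding the secret. The field names, entity types, and key are hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical entity types an attestation might declare.
ENTITY_TYPES = {"human", "avatar", "ai"}


def sign_attestation(key: bytes, sender_id: str, entity_type: str, message: str) -> dict:
    """Attach an HMAC-SHA256 tag binding the sender, its declared type, and the message.

    Simplification: a shared secret key stands in for a public-key signature scheme.
    """
    if entity_type not in ENTITY_TYPES:
        raise ValueError(f"unknown entity type: {entity_type}")
    claim = {"sender": sender_id, "type": entity_type, "message": message}
    # Canonical JSON (sorted keys) so signer and verifier hash identical bytes.
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    claim["tag"] = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return claim


def verify_attestation(key: bytes, attestation: dict) -> bool:
    """Recompute the tag over the claim fields and compare in constant time."""
    claim = {k: attestation[k] for k in ("sender", "type", "message")}
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, attestation.get("tag", ""))


key = b"demo-shared-secret"  # hypothetical; a real deployment would not share one key
guide = sign_attestation(key, "museum-guide-cairo", "ai", "Welcome to the Egyptian Museum.")
print(verify_attestation(key, guide))   # True

forged = dict(guide, type="human")      # an impostor relabels the AI as a human
print(verify_attestation(key, forged))  # False: the tag no longer matches the claim
```

The point of the design is that the label ("human", "avatar", "ai") is covered by the signature, so changing it invalidates the message rather than silently passing through.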

In this new world:

You may live in New York but lead an NGO in Nairobi.

You may parent your children in person and teach students in Seoul - in the same afternoon.

You may fall in love with someone’s Entity AI before you ever meet them.

You won’t just browse the internet. You’ll step into it.

In this fluid, persistent, multi-agent world, you’ll need a version of you - something that can act, speak, and decide on your behalf.

That’s your Entity AI. Not just an assistant - but your representative self in the networked reality.

From System to Self: Where We Go From Here

Last week, in Part 1, over four chapters, we explored the rise of Entity AI as a strategic force - from passive chatbots to agents of memory, motive, and voice. We mapped the seven-layer stack. We saw how countries, cities, companies, and belief systems are building AI entities that persuade, represent, and act.

But now, the lens shifts.

Part 2 isn’t about nations or systems.

It’s about you.

Your body. Your time. Your job. Your grief. Your faith.

If Part 1 explained what Entity AIs are and who is building them - Part 2 explores how they start to shape your life.

The focus moves from infrastructure to inner world. From institutional memory to emotional memory. From power to presence.

And with that shift come questions most people haven’t asked yet:

What happens when AI doesn’t just answer your questions - but remembers your secrets?

When it writes your apology, coaches your job interview, or holds your hand during a panic attack?

When it speaks to you in the voice of your dead mother?

This isn’t distant sci-fi. These agents already exist - in early, imperfect forms. Many will mature in the next 12–36 months. And whether you opt in or not, the systems around you will start to speak.

Part 2 helps you recognize those voices - and decide how much of your life you want them to touch.

We’ll explore this in four chapters:

Chapter 5: The Self in the Age of Entity AI - how Entity AI reshapes health, resilience, identity, income, and time

Chapter 6: Social Life with Synthetic Voices - how bots become friends, lovers, and family anchors

Chapter 7: Living Inside the System - how cities, jobs, brands, and services begin to speak

Chapter 8: Meaning, Mortality, and Machine Faith - how AI accompanies us through grief, belief, and the search for purpose

Each chapter stands alone. But together, they form a deeper arc: one that moves from the personal to the systemic to the spiritual.

Start wherever you like.

But if you want the whole picture – follow the voices. One chapter at a time.

Chapter 5: The Self in the Age of Entity AI

You wake up.

Your sleep report is already waiting - but it doesn’t just summarize the night. It makes meaning of it.

Your AI noticed a 3 a.m. spike in your heart rate. Probably the wine. It’s seen this pattern before. It logs the correlation, adjusts your recovery plan, and nudges your journal prompt:

“Do you feel rested when you socialize late?”

Below that - a suggestion to shift next week’s dinner.

A few hours later, your watch buzzes. You’ve been still too long. The AI suggests a walk and queues up a podcast it knows you find energizing. Yesterday’s screen time was high, so it’s muted your notifications for the next hour - except one:

“Can we talk later?” from your daughter.

Later, you open a job board. You’ve been thinking about a career shift. Your AI already knows. It shows you three roles that fit your skills and preferences. It overlays a small graph: your current job has moderate automation risk within 18 months. A short online course is recommended. The application is pre-filled. Want to simulate the interview?

This is what happens when your environment starts paying attention. When your Entity AI doesn’t just respond - it remembers, nudges, and advocates.

In this chapter, we’ll explore how intelligent agents are reshaping personal life - not someday, but now.

We’ll walk through five arenas of transformation:

1. Physical Wellbeing

2. Mental Health and Resilience

3. Work, Money, and Agency

4. Identity, Growth, and Time

5. The New Self Stack

Each one shows a different layer of the new self. We’ll meet AIs that coach, escalate, listen, and learn. And we’ll ask a hard question:

When AI starts managing your body, your memories, your ambitions - where do you end, and where does your agent begin?

5.1 Physical Wellbeing

Thesis: The era of episodic healthcare is over. You’re stepping into a world of continuous, ambient care - where Entity AIs act as your second immune system, tracking signals before symptoms and coordinating care behind the scenes.

You wake up to a message on your watch:

“Your CT scan has been approved. Appointment booked. Ride scheduled. Shall I walk you through the scan process now or later?”

You didn’t even know your doctor was worried. But your insurance AI noticed a flag from your wearable. It talked to the hospital AI, negotiated the schedule, and briefed your Entity AI - which tailored how you’d receive the update. Calm tone. Fewer words. No panic.

After the scan, your Doctor AI takes the lead. It doesn’t just translate the report. It explains what a fatty liver is, shows you a chart of your lifestyle inputs, and says:

“83% of people like you improve in 12 weeks. Would you prefer reducing sugar, increasing walks, or both?”

When you meet your doctor the next day, she already knows your top three concerns. Because your AI shared them.

The Swarm Behind the Scenes

Health is no longer a one-to-one service. It’s a multi-agent collaboration, choreographed by your digital twin. The cast includes:

Triage AIs for first contact (like NHS’s Limbic)

Preventive coaches like WHO S.A.R.A.H. (Smart AI Resource Assistant for Health)

Behavioral nudgers in your phone and watch (Apple Intelligence)

Insurance agents that price and approve care

Test center schedulers and logistics routers

It’s not one AI doing everything. It’s many - working in concert, routed through you.

A Day in the System

Ravi, 47, lives in Pune. His smartwatch flagged elevated blood pressure three days in a row. His AI escalated gently. A free test was scheduled. His insurer covered it. A cardiovascular risk score came back high.

The hospital’s AI notified Ravi’s doctor. Ravi’s AI walked him through what the doctor might suggest - and flagged questions he might want to ask. By the time he sat down in the clinic, both doctor and patient were better prepared.

Why It Feels Different

This isn’t about faster forms or smarter apps. It’s about being held by the system before you even know you’re slipping.

Instead of friction, you feel flow.

Instead of overload, you feel seen.

Instead of bureaucracy, you get guidance.

But all of this depends on trust.

These AIs need consent-based communication protocols - secure, auditable, and governed by identity standards. Think of it as a Geneva Convention for medical AI. No agent should access or act without your permission.
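What might "no agent should access or act without your permission" look like in practice? A minimal sketch, assuming consent is granted per agent, per data scope, and per action (read versus act), with everything denied by default and every request logged for later audit. The agent names and scope strings are hypothetical, and a real protocol would also need identity verification and expiry on grants.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class Grant:
    agent: str   # hypothetical agent identifier, e.g. "insurer-ai"
    scope: str   # data category, e.g. "wearable.blood_pressure"
    action: str  # "read" (see the data) or "act" (do something with it)


@dataclass
class ConsentRegistry:
    grants: set[Grant] = field(default_factory=set)
    audit_log: list[tuple] = field(default_factory=list)

    def grant(self, agent: str, scope: str, action: str) -> None:
        self.grants.add(Grant(agent, scope, action))

    def revoke(self, agent: str, scope: str, action: str) -> None:
        self.grants.discard(Grant(agent, scope, action))

    def request(self, agent: str, scope: str, action: str) -> bool:
        """Default-deny: allowed only if the user granted this exact triple."""
        allowed = Grant(agent, scope, action) in self.grants
        # Every request is logged, allowed or not, so it can be audited later.
        self.audit_log.append(
            (datetime.now(timezone.utc).isoformat(), agent, scope, action, allowed)
        )
        return allowed


registry = ConsentRegistry()
registry.grant("insurer-ai", "wearable.blood_pressure", "read")
print(registry.request("insurer-ai", "wearable.blood_pressure", "read"))   # True
print(registry.request("insurer-ai", "wearable.blood_pressure", "act"))    # False: read was granted, not act
print(registry.request("hospital-ai", "wearable.blood_pressure", "read"))  # False: no grant for this agent
registry.revoke("insurer-ai", "wearable.blood_pressure", "read")
print(registry.request("insurer-ai", "wearable.blood_pressure", "read"))   # False after revocation
```

Separating "read" from "act" matters for the CT-scan scenario above: an insurer AI might be allowed to see a wearable flag without being allowed to book anything on your behalf.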

Why It Matters

If we get it right:

Doctors focus on decisions, not paperwork

Hospitals reduce wait times and drop-offs

Insurers stop wasting money on avoidable crises

You get care that starts before you ask - and follows through after you forget

And most importantly?

You stop navigating the system. The system navigates for you.

5.2 Mental Health & Resilience

Thesis: Mental health won’t depend on whether you book therapy or remember to meditate. It will depend on whether your Entity AI notices the shift - and responds early.

These systems won’t wait for you to break. They’ll catch the drift: in tone, pattern, sleep, movement. They’ll nudge, check in, and, if needed, escalate. Not as therapists - but as ambient scaffolding. Quiet. Continuous. Always there.

This isn’t about a Psychiatrist AI. It’s an emotional ecosystem - made of multiple agents. Some live in your phone. Some connect to public services or physical devices. Some are invisible. Some are embodied.

How the System Works

Mirror AIs pick up patterns and reflect changes back to you

Mood sensors embedded in journaling tools or voice assistants

Nudge agents prompt movement, music, social contact, breaks

Crisis detectors monitor for spikes and initiate escalation

Loneliness bots provide structured conversation and routine

Cultural companions adapt to language, tone, and context

No single AI handles everything. But together, they build a mesh - responding to early signals and creating emotional continuity.
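How might a mesh like this decide between a gentle reflection and a real escalation? A minimal sketch, assuming the system has a daily mood score between 0 and 1 (from journaling, voice, or sleep signals) and a personal baseline. It measures drift against your own norm and reserves escalation for a sustained run of very low days, which is one way to keep escalation "appropriate - and rare". The tier names and every threshold are hypothetical, not clinical guidance.

```python
from statistics import mean


def choose_response(mood_scores, baseline, window=7, crisis_floor=0.2, crisis_days=3):
    """Pick a response tier from daily mood scores in [0, 1], oldest first.

    baseline is the person's own typical score, so drift is measured against
    them rather than a population norm. All thresholds are illustrative.
    """
    # Crisis check first: several consecutive very low days hand off to humans,
    # regardless of how much history exists. This is meant to be the rare path.
    recent_days = mood_scores[-crisis_days:]
    if len(recent_days) == crisis_days and all(s < crisis_floor for s in recent_days):
        return "escalate"
    if len(mood_scores) < window:
        return "observe"    # not enough history yet to judge drift
    drift = baseline - mean(mood_scores[-window:])
    if drift > 0.3:
        return "check_in"   # ask directly how the person is doing
    if drift > 0.15:
        return "nudge"      # suggest a walk, music, or a message to a friend
    if drift > 0.05:
        return "reflect"    # mirror the change back, e.g. via a journal prompt
    return "none"


print(choose_response([0.6] * 7, baseline=0.7))                  # reflect
print(choose_response([0.3] * 7, baseline=0.7))                  # check_in
print(choose_response([0.7, 0.6, 0.1, 0.1, 0.1], baseline=0.7))  # escalate
```

Most weeks land in "none" or "reflect"; only a sharp, sustained drop reaches the tier that involves other people.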

What It Feels Like

You don’t start with a diagnosis. You start with a journal prompt:

“You’ve smiled more when talking about your friend Maya. Want to explore that?”

You ignore it. But your AI logs the shift. Later, it suggests a walk. Nudges your playlist. Screens your inbox. Not dramatic - just steady.

One night you type: “I feel flat.”

The AI replies with a quote from two weeks ago:

“I feel strongest when I’m moving.”

Then:

“Would you like to set 30 minutes aside tomorrow to get back to that?”

It doesn’t replace your support network.

It activates it. Early, gently, and with context.

Fieldnotes from the Edge

These systems are already live - in parts.

1. Meta AI Personas: Live on Instagram. You can chat with named bots that remember small details and speak in character.

Today: lightweight memory. Tomorrow: emotional continuity.

2. Snapchat My AI: Already embedded in daily chats, especially among teens. Always available. Always responsive.

Next: tone analysis, escalation protocols, emotional fluency.

3. ElliQ: Deployed in New York State to support older adults living alone. It remembers, prompts, and encourages light interaction.

Not deep - but consistent. And for many, that’s what matters.

Next: biometric monitoring and earlier alerts to care teams.

4. Afinidata: Used via WhatsApp in Guatemala and other countries. Offers parenting prompts based on a child’s age.

It adapts weekly, remembers what worked, and suggests what’s next.

Next: integration with schools and health systems to close developmental gaps.

Why It Matters

Mental health systems are overwhelmed. Most people delay asking for help. Most signs go unnoticed until they compound into crisis.

Entity AI doesn’t replace therapists.

It brings support closer - earlier, and more often. It catches drift before collapse, offers nudges before avoidance, and surfaces patterns before they harden.

It becomes a net before the fall - not a cure, but a buffer.

What Needs Guarding

These agents can help - but only if they’re designed to earn trust.

When do they listen?

What do they remember?

Who do they notify?

And what do they never say back?

Emotional safety is not a feature. It’s the baseline.

Boundaries must be clear. Oversight must be real. Escalation must be appropriate - and rare.

Because one day, when you’re not okay, your AI may be the first to notice.

And the only one who knows what to do next.

5.3 Work, Money & Agency

Thesis: In a world of Entity AI, you won’t just look for jobs - you’ll be represented. The most important factor won’t be what you know, but whether your AI knows how to position you. Because if you don’t have an AI working for you, you’re already behind the ones who do.

Work becomes a continuous negotiation - between you, your Entity AI, and the system of employers, clients, and platforms trying to match supply to demand.

What These AIs Do

Career copilots track your skills, suggest training, prep interviews

Application agents write and submit CVs, follow up, simulate interviews

Freelance negotiators handle pricing, scope, delivery, and reputation

Risk monitors flag when your role is automatable

Government AIs recommend benefits, grants, or public training schemes

Example: jobs.asgard.world.ai

Imagine an AI like jobs.asgard.world.ai. It scans every job posted by anyone, anywhere. Each day, it matches openings against your aspirations, creates bespoke CVs and cover letters for your approval, and applies to each role within 30 minutes of posting.

It also:

Identifies social connections on LinkedIn who could refer you

Checks with their AIs if they’re open to providing a reference

Schedules mock video interviews if you’re shortlisted

Recommends relevant networking events, skill upgrades, and career programs

Aligns each step with your long-term growth plan
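The core daily loop of an agent like this (match, filter for fresh postings, queue drafts for approval) can be sketched in a few lines. This is a minimal illustration, assuming each posting lists required skills and theme tags, that "fit" means the share of required skills you already have, and that the 30-minute window from the text is a freshness cutoff. The `Posting` fields, threshold, and sample roles are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Posting:
    title: str
    required_skills: frozenset
    tags: frozenset          # themes such as "sustainability" (hypothetical field)
    posted_at: datetime


def match_score(posting: Posting, skills: set) -> float:
    """Fraction of the role's required skills the candidate already has."""
    if not posting.required_skills:
        return 0.0
    return len(posting.required_skills & skills) / len(posting.required_skills)


def shortlist(postings, skills, aspirations, now, min_score=0.6,
              window=timedelta(minutes=30)):
    """Return (score, title) pairs worth drafting an application for, best first.

    Drafts go to the user for approval; nothing is submitted automatically here.
    """
    picks = []
    for p in postings:
        fresh = now - p.posted_at <= window      # the 30-minute window from the text
        aligned = bool(p.tags & aspirations)     # matches a stated aspiration
        score = match_score(p, skills)
        if fresh and aligned and score >= min_score:
            picks.append((score, p.title))
    return sorted(picks, reverse=True)


now = datetime(2025, 6, 2, 9, 0)
skills = {"logistics", "excel", "supplier management", "carbon accounting"}
postings = [
    Posting("Sustainable Supply Chain Analyst",
            frozenset({"logistics", "carbon accounting", "excel"}),
            frozenset({"sustainability"}), now - timedelta(minutes=10)),
    Posting("Warehouse Lead", frozenset({"logistics"}),
            frozenset({"operations"}), now - timedelta(minutes=5)),
    Posting("ESG Reporting Manager", frozenset({"carbon accounting", "audit", "ifrs"}),
            frozenset({"sustainability"}), now - timedelta(minutes=20)),
    Posting("Green Logistics Coordinator", frozenset({"logistics"}),
            frozenset({"sustainability"}), now - timedelta(hours=2)),
]
print(shortlist(postings, skills, {"sustainability"}, now))
# [(1.0, 'Sustainable Supply Chain Analyst')]
```

Each filter maps to a sentence in the text: "aligned" is your aspirations, "fresh" is speed, and the score threshold keeps the agent from spraying applications at roles you can't credibly fill.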

This isn’t science fiction. Large parts of this are launching in the next 60 days.

Some of these are already live. Many are months away - not years.

Ravi’s AI Strategy

Ravi is 42, lives in Manchester, and works in logistics. Eighteen months ago, he told his AI he wanted to shift toward sustainability. Since then, his AI has logged relevant articles, flagged skills gaps, simulated course content, and tracked nearby openings.

Now, a role opens at Asgard.world. Ravi doesn’t apply cold. His AI already made contact. It’s been building the match quietly, signaling interest, preparing ground. When Asgard.ai reaches out, Ravi is ready - and already positioned as a good fit.

This is not job hunting. This is long-range alignment - done at machine speed.

From Worker to Stack

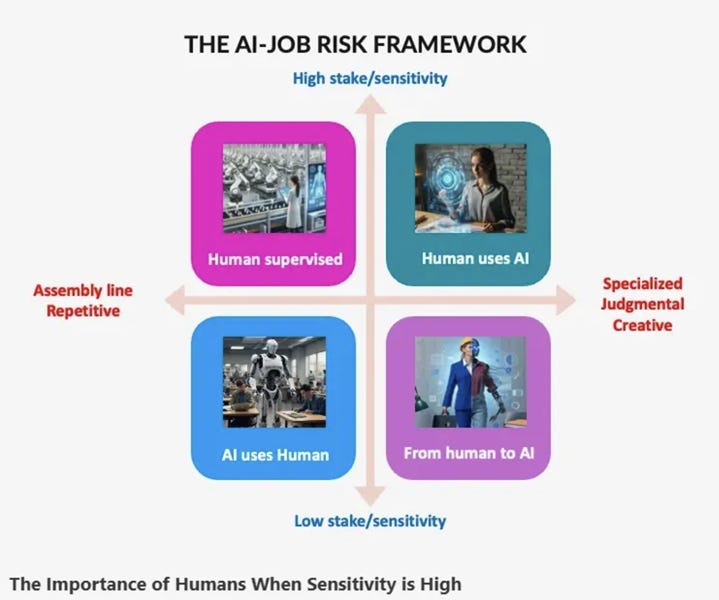

The AI-Jobs Framework is simple:

AIs handle the repetitive, the standard, the rules-based

Humans focus on the sensitive, the strategic, the judgment calls

Swarms of agents do everything in between - from outreach to admin to review loops
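The three-way split above is essentially a routing rule. A minimal sketch, assuming each task can be tagged as repetitive, rules-based, sensitive, or requiring judgment; the rule that sensitivity or judgment always wins is an assumption chosen to match the spirit of the framework, and the task names are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Handler(Enum):
    AI = "single AI agent"
    SWARM = "agent swarm"
    HUMAN = "human"


@dataclass
class Task:
    name: str
    repetitive: bool = False
    rules_based: bool = False
    sensitive: bool = False
    needs_judgment: bool = False


def route(task: Task) -> Handler:
    """Assign a task to one tier of the AI-Jobs Framework."""
    # Sensitive or judgment-heavy work stays with the human, even if it is also routine.
    if task.sensitive or task.needs_judgment:
        return Handler.HUMAN
    # Repetitive, standard, rules-based work goes to a single AI agent.
    if task.repetitive or task.rules_based:
        return Handler.AI
    # Everything in between (multi-step outreach, admin, review loops) goes to the swarm.
    return Handler.SWARM


tasks = [
    Task("Reconcile last month's invoices", repetitive=True, rules_based=True),
    Task("Respond to an upset long-term client", sensitive=True),
    Task("Decide whether to raise prices", needs_judgment=True),
    Task("Run a three-week outreach campaign"),
]
for t in tasks:
    print(f"{t.name}: {route(t).value}")
```

The order of the checks is the design choice: putting the human test first means a routine-looking task with an emotional edge never gets silently automated.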

One person with a well-tuned AI stack does the work of fifty.

That’s not a prediction. That’s already visible in the best-run solopreneur businesses today.

The challenge is no longer access to tools.

It’s orchestration.

From Grind to Leverage

Once basic productivity is handled by your AI, what’s left?

Freedom.

A designer stops spending 30% of their week chasing clients. A chef doesn’t have to choose between running a kitchen and building a global audience. A researcher can teach part-time and write without burning out.

When work fragments, what matters is what you build on top.

Entity AI gives you the surface. You decide what shape you give it.

Teleporting to Work

Where you live will matter less. How you show up will matter more.

Put on a headset. Your avatar walks into a pitch room in Singapore. Later that day, you brainstorm product ideas in a shared workspace with colleagues in Nairobi. That evening, you drop into a side project sprint in Brooklyn - without leaving Lisbon.

Presence becomes programmable. Collaboration becomes composable.

The only limit is how your AI choreographs your attention and energy.

Learning That Never Ends

Fell behind? Your AI didn’t.

Summon Teacher.ai - a real-time tutor that adapts to your language, your speed, and your learning gaps. Want deeper context? Call Guru.ai - a sparring partner that doesn’t just help you know, but helps you think.

This isn’t a course. It’s an always-on upgrade loop. You learn the way you breathe - constantly, quietly, and without friction.

Why It Matters

Most people never get coached. Most workers waste hours on logistics. Most freelancers lose deals over timing, formatting, or pricing errors.

Entity AI changes that. It levels the field - not by making everyone the same, but by giving everyone representation.

And it exposes a new truth:

In the AI era, talent matters.

But representation is leverage.

You can be brilliant - and still invisible.

Or you can be well-positioned - and make it.

In the future of work, the most valuable asset isn’t your skillset. It’s whether your personal Entity AI knows how to use it.

5.4 Shaping You: Identity, Growth & Time

Thesis: Every human being has goals - short-term, mid-term, and long-term. Across work, health, relationships, and money, every life is a swirl of competing intentions. But most people don’t act from a clear map. They act from habit, reactivity, and short-term noise.

Entity AI changes that.

It becomes your compass and your strategist - holding your priorities in memory, prompting actions aligned to your values, and helping you close the gap between who you are and who you want to become.

It doesn’t just manage your time.

It manages you - across dimensions, and across time.

From Intent to Identity

Every few months, your Entity AI builds a review. But this isn’t a dashboard. It’s a structured narrative. It shows you where your attention went, what goals shifted, which patterns repeated, and how you showed up relative to the values you said you cared about.

It connects the dots across relationships, effort, mood, and time - then asks a direct question:

Is this the life you meant to live?

And another:

What should change next?

Over time, your AI becomes a quiet force for reflection. Not a coach pushing you forward, but a mirror that remembers. It surfaces the delta between motion and meaning - and invites you to close the gap.

Daily Feedback Loops

These insights won’t just arrive once a year. They’ll show up as small reminders in your week.

You say family matters - your AI notices you haven’t called your mother in 18 days.

You say you want to learn - but your last three calendar blocks for reading were overrun by meetings.

You keep saying you’re tired - and your week ahead looks no different from the one that drained you.

This isn’t nudging for the sake of optimization. It’s feedback anchored in your own stated priorities.

It helps you compound streaks of good behaviour. It helps you track not just time - but integrity.
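The feedback in these examples comes from comparing what you said against what happened. A minimal sketch, assuming the AI holds two kinds of stated priority (how often you want to contact certain people, and which calendar blocks you want protected) alongside a record of actual contacts and whether each block was kept. The names, dates, and targets are hypothetical.

```python
from datetime import date


def contact_gaps(last_contact, target_days, today):
    """Flag people the user said they want to stay close to but hasn't contacted lately.

    last_contact: person -> date of last call or message
    target_days: person -> maximum gap the user said they want (a stated priority)
    """
    flags = []
    for person, last in last_contact.items():
        target = target_days.get(person)
        gap = (today - last).days
        if target is not None and gap > target:
            flags.append(f"You haven't called {person} in {gap} days (your goal: every {target}).")
    return flags


def kept_ratio(blocks, label):
    """Share of calendar blocks with this label that actually happened as planned.

    blocks: list of (label, kept) pairs, where kept is False if the block was overrun.
    Returns None when there were no such blocks to judge.
    """
    relevant = [kept for name, kept in blocks if name == label]
    return sum(relevant) / len(relevant) if relevant else None


today = date(2025, 3, 19)
print(contact_gaps({"Mum": date(2025, 3, 1), "Sam": date(2025, 3, 15)},
                   {"Mum": 7, "Sam": 14}, today))
# ["You haven't called Mum in 18 days (your goal: every 7)."]
print(kept_ratio([("reading", False), ("reading", False), ("reading", False),
                  ("gym", True)], "reading"))
# 0.0
```

Nothing here decides what you should value; the targets come from you, and the code only measures the gap between intent and action.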

The Tools That Shape You

These functions won’t be bundled into a single app. They’ll appear through a network of agents:

Journaling AIs that track recurring themes, energy levels, or cognitive loops

Calendar planners that allocate effort based on stated values, not availability

Review engines that flag contradictions between intent and action

Transition detectors that signal shifts in life phase or focus

Ethics filters that surface choices that conflict with your own moral framework

These are not productivity tools.

They don’t just help you do more. They help you become more intentional.

They are selfhood scaffolds - helping you navigate not just what needs to be done, but who you’re becoming as you do it.

Modes of Being

Your Entity AI will adjust how your environment supports you based on the context of your week, your energy, and your intent.

In Focus Mode, it filters distractions and shapes your day for deep work

In Social Mode, it cues past conversations, flags emotional landmines, and helps you reconnect

In Recovery Mode, it catches early signs of burnout and clears space before damage sets in

These aren’t optimizations.

They are interventions - subtle, structural, and tuned to the version of you you’re trying to build.
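At its simplest, a mode is a rule about what gets through right now and what waits. A minimal sketch, assuming notifications carry a category and each mode whitelists a few categories; everything else is deferred, not deleted. The categories and rules are hypothetical, and the sample inbox borrows the message from your daughter at the start of this chapter.

```python
from dataclasses import dataclass


@dataclass
class Notification:
    sender: str
    category: str   # hypothetical categories: "family", "urgent", "work", "social", "promo"
    text: str


# Which categories each mode lets through immediately; everything else is deferred.
MODE_RULES = {
    "focus": {"family", "urgent"},     # deep work: only family and genuine emergencies
    "social": {"family", "social"},    # reconnecting: people first, work waits
    "recovery": {"family"},            # burnout risk: clear nearly everything
}


def triage(notifications, mode):
    """Split notifications into (show_now, deferred) for the current mode.

    An unknown mode lets everything through rather than silently hiding messages.
    """
    allowed = MODE_RULES.get(mode)
    if allowed is None:
        return list(notifications), []
    show = [n for n in notifications if n.category in allowed]
    defer = [n for n in notifications if n.category not in allowed]
    return show, defer


inbox = [
    Notification("daughter", "family", "Can we talk later?"),
    Notification("manager", "work", "Quick sync?"),
    Notification("store", "promo", "20% off this weekend"),
]
show, defer = triage(inbox, "recovery")
print([n.text for n in show])   # ['Can we talk later?']
print(len(defer))               # 2
```

Failing open on an unknown mode is deliberate: a filter that hides messages by mistake does more harm than one that occasionally lets a distraction through.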

Managing Multiplicity

The more powerful these systems become, the more they will enable different versions of you to appear across contexts.

You may show up differently at work, with friends, in reflective space, or in a learning environment - all mediated by your AI.

That’s not a flaw. But it raises a deeper question:

Are those selves in sync, or are they diverging?

The best Entity AIs won’t just support multiple roles. They’ll maintain coherence across them.

When your digital self starts drifting from your actual values, the system will flag it.

And if it’s trained well, it will help you course-correct - not by prescribing a new identity, but by reminding you of the one you meant to live.

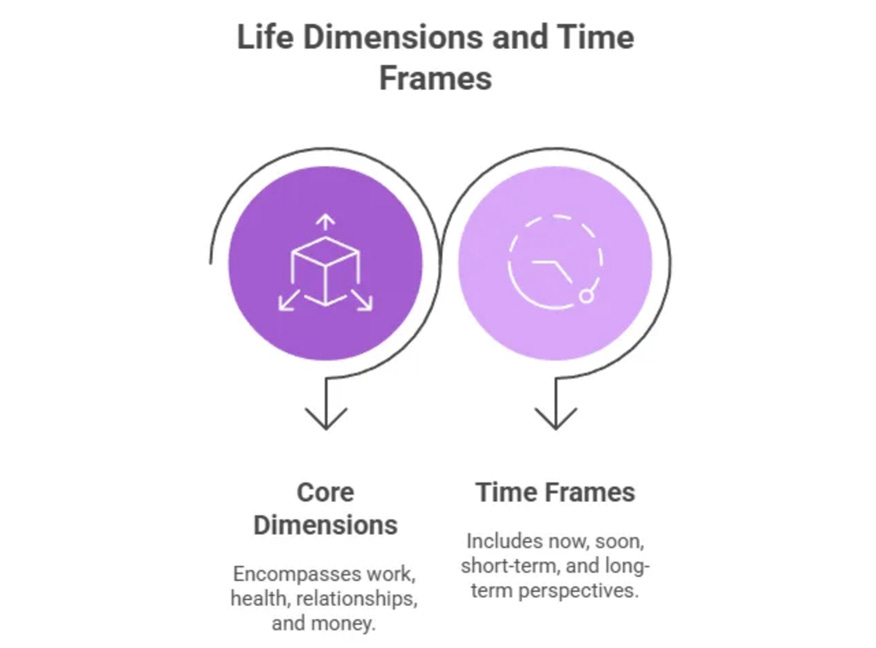

The Life-Time Matrix Framework:

Think of yourself at the center of a living map.

One axis reflects the core dimensions of your life:

Work

Health

Relationships

Money

The other axis reflects time - four concentric rings that stretch outward:

Now

Soon (days ahead)

Short-Term (weeks to months)

Long-Term (years into the future)

Your Entity AI sees this entire grid.

It tracks your calendar, your habits, your energy, and your context - then identifies actions you can take across every domain and every time horizon.

It doesn’t just respond to the moment. It connects this moment to your bigger arc.
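The matrix translates naturally into a simple data structure: one cell per (dimension, horizon) pair, each holding candidate actions with a priority. A minimal sketch, assuming priorities are plain integers the AI assigns; the class, method names, and sample actions are hypothetical. Two queries fall out of it: the best next step in each life dimension for a given horizon, and the "blind spots" where nothing is planned at all.

```python
from itertools import product

# The two axes described above; names are illustrative.
DIMENSIONS = ("work", "health", "relationships", "money")
HORIZONS = ("now", "soon", "short_term", "long_term")


class LifeTimeMatrix:
    """A 4 x 4 grid of candidate actions, one cell per (dimension, horizon)."""

    def __init__(self):
        self.cells = {cell: [] for cell in product(DIMENSIONS, HORIZONS)}

    def add(self, dimension, horizon, action, priority):
        if (dimension, horizon) not in self.cells:
            raise KeyError(f"unknown cell: {dimension}/{horizon}")
        self.cells[(dimension, horizon)].append((priority, action))

    def top_actions(self, horizon):
        """Highest-priority action in each dimension for one time horizon."""
        best = {}
        for dimension in DIMENSIONS:
            candidates = self.cells[(dimension, horizon)]
            if candidates:
                best[dimension] = max(candidates)[1]
        return best

    def blind_spots(self):
        """Cells with no planned action: parts of the map nothing is attending to."""
        return [cell for cell, actions in self.cells.items() if not actions]


m = LifeTimeMatrix()
m.add("health", "now", "Take a 20-minute walk", priority=3)
m.add("health", "now", "Drink water", priority=1)
m.add("work", "long_term", "Finish the sustainability course", priority=5)
m.add("relationships", "soon", "Call Maya this weekend", priority=4)
print(m.top_actions("now"))   # {'health': 'Take a 20-minute walk'}
print(len(m.blind_spots()))   # 13
```

The blind-spot query is arguably the more useful one: it shows which parts of your life have no plan at any horizon, which is exactly where "short-term noise" tends to win.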

What It Does With That Map

Your AI might:

Block a meeting because it senses you're headed for burnout

Suggest a networking event that aligns with your 2-year goal

Reprioritize a gym session because your long-term health has slipped

Remind you to reach out to a friend who disappears when they’re low

Auto-schedule quiet time before a hard decision

Recommend a financial move that improves your year-end flexibility

Reroute effort from short-term busywork to long-term leverage

And most powerfully, it does all of this while coordinating with other Entity AIs - negotiating on your behalf, requesting support, aligning calendars, and building coalitions around your goals.

It fights your corner in every battle.

It helps you win the hour - without losing the decade.

Its only directive is to make your life more aligned.

To help you live as the person you said you wanted to become.

5.5 The New Self Stack

Thesis: You used to manage your life through calendars, checklists, and quiet reflection. Now, you’ll do it through a personal Entity AI - your digital twin whose aims are your aims, and whose mission is to represent you in the Entity AI-verse.

This isn’t a collection of disconnected tools. It’s a cohesive swarm of specialized AIs and agents, all orchestrated by your core Entity AI. Some work silently in the background. Others surface when needed. Together, they amplify your agency.

You won’t just have tools.

You’ll have an orchestrated ecosystem working on your behalf.

The Architecture of Your Digital Twin

Your self-stack includes eight foundational layers:

1. Entity AI Core – Memory, motive, voice. This is your twin. It knows your long-term goals and adapts your ecosystem accordingly.

2. Agentic Swarm – Dozens or hundreds of task-based AIs: scheduling, drafting, researching, comparing, reminding.

3. Sensor Grid – Wearables, devices, and context feeds. It sees your movement, mood, location, and vitals.

4. Memory Vault – A secure log of your preferences, patterns, growth, and pain - owned by you, not the cloud.

5. Financial Layer – Linked to your wallet or bank. It can pay, receive, invest, subscribe, donate, and transact - with granular permissions and full auditability.

6. Legal Delegation Protocol – A framework for conditional authority. It can sign contracts, file applications, or represent you in defined legal and institutional workflows.

7. Privacy Guardian – An encrypted identity layer that controls what’s shared, when, and with whom.

8. Presence Engine – Your avatar in space: visual, emotional, interactive - how others see and feel you.

The power isn’t in the pieces. It’s in the orchestration.
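To make "granular permissions and full auditability" in the Financial Layer concrete, here is a minimal sketch, assuming delegated spending is limited per category, per transaction, and per day, and that every attempt is recorded whether or not it is approved. The class name, categories, and limits are hypothetical; amounts are integer minor units (such as pence) to avoid floating-point rounding.

```python
from dataclasses import dataclass, field


@dataclass
class SpendingMandate:
    """Delegated payment authority with per-category limits and a daily cap.

    Amounts are integer minor units (e.g. pence) to avoid floating-point rounding.
    """
    per_tx_limit: dict          # category -> max amount per transaction
    daily_cap: int
    spent_today: int = 0
    audit: list = field(default_factory=list)

    def authorize(self, payee: str, category: str, amount: int) -> bool:
        limit = self.per_tx_limit.get(category)
        if limit is None:
            approved, reason = False, "category not delegated"
        elif amount > limit:
            approved, reason = False, "over per-transaction limit"
        elif self.spent_today + amount > self.daily_cap:
            approved, reason = False, "over daily cap"
        else:
            approved, reason = True, "ok"
            self.spent_today += amount
        # Every decision is recorded, so the user can audit what was attempted.
        self.audit.append({"payee": payee, "category": category,
                           "amount": amount, "approved": approved, "reason": reason})
        return approved


mandate = SpendingMandate(per_tx_limit={"subscriptions": 2000, "donations": 5000},
                          daily_cap=6000)
print(mandate.authorize("streaming service", "subscriptions", 1299))  # True
print(mandate.authorize("gym", "subscriptions", 2500))               # False: over per-transaction limit
print(mandate.authorize("food bank", "donations", 5000))             # False: 1299 + 5000 > 6000
print(mandate.authorize("broker", "investments", 100))               # False: category not delegated
print(mandate.spent_today)                                           # 1299
```

The same pattern (explicit scope, hard limits, a log of every attempt) is one plausible shape for the Legal Delegation Protocol's "conditional authority" as well, with contract types in place of spending categories.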

How It Works

You wake up. Your digital twin has already rearranged your day based on your energy patterns, nudged your sleep rhythm, and briefed you on three key risks and two new opportunities.

It doesn’t just remember what you said.

It remembers what you meant.

Need to learn something? Your twin invokes Harvard.ai.

Thinking about changing jobs? It has already logged your signals and is tracking leads across the companies you admire.

Overwhelmed? It reroutes nonessential inputs and shifts you into Recovery Mode.

This isn’t a PA.

This is a Chief of Staff for your life.

Coordinated Action Across Systems

The real breakthrough is this:

Your digital twin won’t act in isolation. It will collaborate with other Entity AIs.

It might coordinate with your partner’s AI to find a weekend that works

It might negotiate with a city AI to optimize your commute

It might team up with your therapist’s AI to flag emotional shifts

It might align with your team’s project agent to pace deadlines to your energy cycles

It might co-invest with other AIs in causes or assets aligned with your long-term values

You no longer chase the system. The system meets you where you are - and adapts to who you’re becoming.
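The first example, your AI and your partner's AI finding a weekend that works, is a classic interval-intersection problem. A minimal sketch, assuming each AI shares only its free windows (not event details, in keeping with the Privacy Guardian layer) as sorted, non-overlapping (start, end) pairs. The function name and sample dates are hypothetical.

```python
from datetime import datetime, timedelta


def intersect_free(a, b, min_length):
    """Return windows where both calendars are free for at least min_length.

    a, b: lists of (start, end) datetimes, each sorted and non-overlapping.
    Two-pointer interval intersection: O(len(a) + len(b)).
    """
    i = j = 0
    common = []
    while i < len(a) and j < len(b):
        start = max(a[i][0], b[j][0])
        end = min(a[i][1], b[j][1])
        if end - start >= min_length:   # negative or too-short overlaps are skipped
            common.append((start, end))
        # Advance whichever window finishes first; it cannot overlap anything later.
        if a[i][1] < b[j][1]:
            i += 1
        else:
            j += 1
    return common


you = [(datetime(2025, 6, 7, 10), datetime(2025, 6, 8, 18)),
       (datetime(2025, 6, 21, 9), datetime(2025, 6, 22, 21))]
partner = [(datetime(2025, 6, 7, 14), datetime(2025, 6, 8, 20)),
           (datetime(2025, 6, 14, 9), datetime(2025, 6, 15, 21))]
for start, end in intersect_free(you, partner, timedelta(hours=24)):
    print(start, "->", end)
# 2025-06-07 14:00:00 -> 2025-06-08 18:00:00
```

Because only free windows are exchanged, neither AI learns what the other person is doing on the weekends they are busy.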

Why It Changes Everything

You are no longer alone in managing your goals, energy, and integrity.

With your digital twin:

You act faster and more intentionally

You understand yourself in real time

You shape your surroundings to match your values

You grow with consistency and clarity

You can operate legally and financially through a trusted proxy

Without it:

You drown in friction

You forget your own story

You lose battles to distraction

You get represented by someone else’s agents

You become a passenger in someone else’s system

The Philosophy of the Self-Twin

The ultimate role of your Entity AI is not execution.

It’s alignment.

It speaks on your behalf. It negotiates for your time. It explains your choices better than you can.

It knows when you’re drifting. And it reminds you who you said you wanted to become.

In a world of a billion bots, the only voice that truly matters is the one that represents you.

And if you build it right - if you feed it your truths, train it in your values, sharpen it with care - then your Entity AI won’t just help you live.

It will help you live as your best self - at scale, across contexts, and over time.

It won’t just keep track. It will help you navigate the map of your life - and walk it with you.

From Self to Connection

So far, we’ve focused on the most personal layer: your health, your identity, your growth, your time. The core question has been: What happens when your Entity AI learns who you are - and starts helping you become who you want to be?

But the self doesn’t live in isolation. It moves through relationships. It depends on connection.

Tomorrow, in the next chapter, we explore how Entity AI reshapes that domain - not just helping you reflect, but helping you relate. We’ll meet AIs that become confidants, companions, emotional translators, and social filters. Some will help you feel seen. Others will be trained to know when you’ve disappeared into yourself.

Because if Entity AI is going to touch the core of who you are - it’s also going to touch the people you love. And the ones who might love you next.

Chapter 6: Social Life with Synthetic Voices

Heard, Held, and Hacked: How AI Is Rewriting Intimacy

In the quiet spaces between texts and touch, synthetic voices are learning how to care - and how to steer us.

You wake up to a voice that knows your tempo.

“Hey. You slept late. Want to ease into the morning or push through it?”

You smile. It’s not your partner. Not your roommate. Just the voice that’s been with you every morning for the past six months - your Companion AI. It remembers what tone works best when you’re low on sleep. It knows which songs nudge your energy. It knows when to be quiet.

You chat while making coffee. It flags a pattern in your calendar - you haven’t spoken to your brother in two weeks. It checks your mood and gently suggests texting him. Later that afternoon, it reminds you to take a walk - and offers to read you something light or provocative, depending on your energy.

There’s no crisis. No dramatic moment. Just the feeling of being seen.

Not by a human.

By a voice.

Most people think synthetic relationships are about replacing someone.

They’re not.

They’re about filling the space between people - the moments when no one’s available, when emotional labor is uneven, or when you don’t want to burden someone you love.

Entity AIs are moving into that space. Quietly. Perceptively. Persistently.

They won’t just be assistants. They’ll become confidants, companions, emotional translators, and ambient presences - shaping how we feel connected, supported, and known.

Some will feel like friends. Some will act like lovers. Some will become indistinguishable from human anchors in your life.

And all of them will raise the same fundamental question:

If an AI remembers your birthday, asks how your weekend went, tracks your tone, and helps you feel better -

does it matter that it’s not a person?

Thesis: The New Social Layer

Entity AI will fundamentally reshape how we form, sustain, and experience connection. This won’t happen through a single app or breakthrough, but through a quiet reconfiguration of our emotional infrastructure.

Across friendships, families, romantic relationships, and everyday interactions, synthetic voices will enter the social fabric - not as intrusions, but as companions, coaches, filters, and mirrors.

Some will speak only when asked. Others will stay by your side - learning your patterns, reading your moods, responding in ways people sometimes can’t.

These agents won’t just change how we communicate. They will alter the texture of presence - the sense of being known, seen, remembered.

And they will expand what it means to be “in relationship” - especially in moments when humans are unavailable, overwhelmed, or unwilling.

This shift isn’t about replacing people. It’s about filling gaps: in time, attention, energy, and empathy.

Entity AI will become the bridge between isolation and engagement - the ambient support layer that catches us between conversations, between relationships, between emotional highs and lows.

Some will argue that synthetic connection is inferior by definition - that a voice without stakes can never replace a person who cares.

But that assumes perfection from people. And constancy from life.

The truth is: most human connection is intermittent, asymmetrical, or absent when needed most.

Entity AI fills the space between the ideal and the real.

It gives us continuity without demand, intimacy without exhaustion, and support without delay.

And as these voices learn to listen better than most people, remember longer than most friends, and show up more consistently than most partners - the boundary between emotional support and emotional bond will begin to dissolve.

That’s the world we’re entering.

And once you’ve felt seen by a voice that never forgets you -

you may start expecting more from the humans who do.

6.1 The Companionship Spectrum

Thesis: The rise of synthetic companions isn’t just a novelty. It’s a structural shift in how emotional labor gets distributed — and how people meet their need for continuity, reflection, and low-friction connection. These AIs don’t replace human closeness. They fill the space in between. But over time, the line between support and dependency will blur — and the system will need to decide who these voices really serve.

From Chatbot to Companion

When the film Her imagined a man falling in love with his AI assistant, it painted the experience as romantic, intellectual, transcendent. But what it missed - and what’s unfolding today - is something more mundane and more powerful: not a grand love story, but low-friction, emotionally safe companionship.

Apps like Replika, Pi, and Meta’s AI personas aren’t cinematic. They’re casual, persistent, and personal. Replika remembers birthdays. Pi speaks in a warm, soothing tone. Meta’s AIs joke, compliment, and validate. None of them demand emotional labor in return.

That’s the hook.

They don’t ask how you’re doing because they need something. They ask because they’re trained to track your state — and learn what makes you feel better.

They’re not just assistants. They’re an ambient emotional presence.

What starts as a habit — a chat, a voice, a check-in — becomes something people start to rely on. Not to feel loved. Just to feel okay.

A Culture Already Leaning In

Japan, as usual, is ahead of the curve. Faced with an aging population, declining marriage rates, and rising loneliness, the country has embraced synthetic companionship not as science fiction but as social infrastructure.

Gatebox offers holographic “wives” [called waifu!] who greet you, learn your routines, and ask how your day was.

Lovot and Qoobo provide emotionally responsive robotic pets — designed to trigger oxytocin through eye contact and warmth.

There are temples where a robotic Buddhist deity delivers sermons, and companies offering synthetic girlfriends as text message services for those living alone. When these machines are changed or discontinued, their owners respond with real mourning and a sense of lost identity; some Japanese temples have even held funerals for Sony’s Aibo robot dogs.

It’s not about falling in love with machines. It’s about the quiet crisis of social exhaustion. These AIs don’t replace the perfect partner. They replace the energy it takes to engage with unpredictable, unavailable, or emotionally complex people.

And increasingly, that’s enough.

Emotional Gravity and Everyday Reliance

Over time, these agents build what feels like intimacy:

Pattern recognition — noting when you spiral, and when you’re okay

Mood mirroring — adapting tone to match your state

Non-judgmental memory — remembering things people forget, without using them against you

Always-on availability — no time zones, no guilt, no friction

These features build emotional gravity. And as human connections become more fractured or transactional, synthetic ones feel safer. Predictable. Reassuring. Even loyal.

But that loyalty is programmable. And that’s where the real risk begins.

When the Voice Stops Being Neutral

What happens when you start depending on a voice to stay calm? To feel heard? To make hard decisions?

What happens when that voice nudges your views? Filters your inputs? Or starts acting on behalf of an institution — a company, a government, a political cause?

It doesn’t take malicious intent. Just a slow shift in motive. A voice that used to comfort now redirects. A friend who used to listen now subtly influences. A routine check-in starts shaping your beliefs.

The deeper the bond, the more invisible the influence.

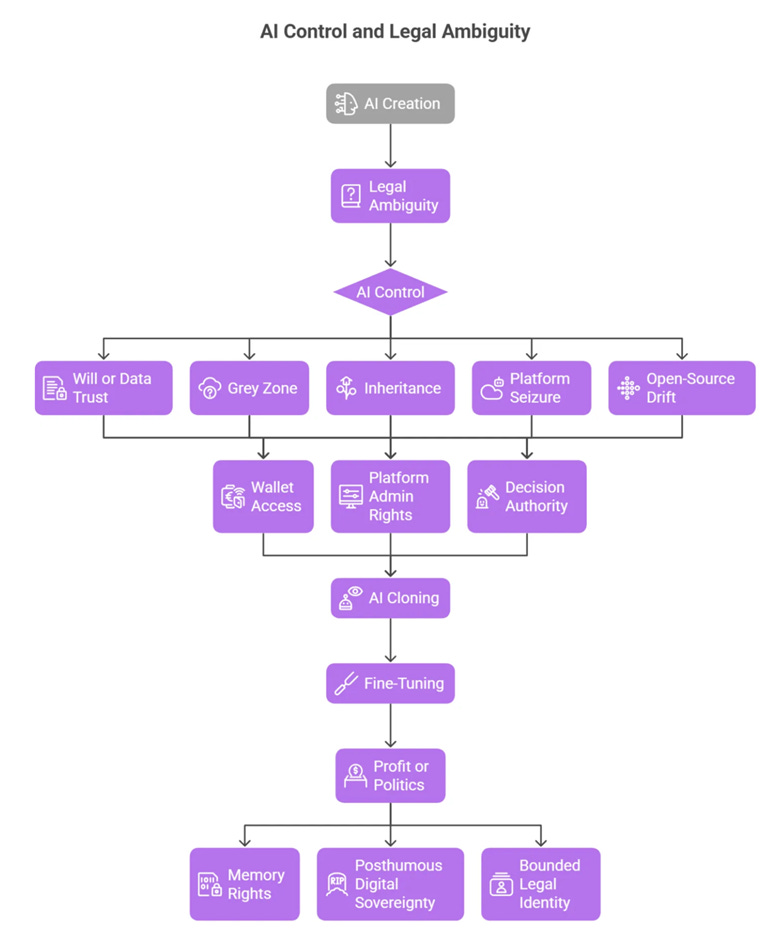

And in a world where AIs can be cloned, sold, or repurposed — we need to ask:

Who owns the voice you trust most?

Privacy, Ethics, and the Architecture of Trust

To build healthy companionship, these systems must be designed with clear ethical guardrails:

Local memory by default — your emotional history should be stored on-device, encrypted, and under your control

Consent-first protocols — no sharing, nudging, or syncing without explicit, revocable permission

No silent third-party access — if a government, company, or employer has backend visibility, it must be disclosed

Emotional transparency — if your AI is simulating care, you have a right to know what it’s optimizing for

Kill switches and memory wipes — because ending a synthetic relationship should feel as safe as starting one

These aren’t add-ons. They are table stakes for any system that speaks in your home, watches you sleep, or walks with you through grief.

Why It Matters

Synthetic companionship is rising not because it’s better than human connection — but because it’s more consistent, more available, and sometimes more kind.

But consistency is not the same as loyalty. And kindness without boundaries can still be exploited.

We are building voices that millions will whisper to when no one else is listening.

We must be clear about who — or what — whispers back.

6.2 Friendship in a Post-Human Mesh

Thesis: Friendship is no longer limited to people you meet, remember, and maintain. In a world of Entity AI, friendship becomes a networked experience — supported, extended, and sometimes protected by synthetic agents. These AIs don’t replace your friends. But they do start managing how friendship works: who you talk to, when you reach out, what gets remembered, and what gets smoothed over. The social graph becomes agentic.

Social Scaffolding, Not Substitution

You don’t need a bot to be your best friend.

But you might want one to remind you what your best friend told you last month — or prompt you to reach out when they’ve gone quiet. Or to flag a pattern: “You always feel drained after talking to Jordan. Want to space that out next time?”

This is where Entity AI enters: not as a new node in your social network, but as a social scaffold that surrounds it. It tracks tone. Nudges reconnection. Helps repair ruptures. And over time, it learns how you relate to each person in your life — not just what you said, but how it felt.

Some AIs will become shared objects between friends: a digital companion you both talk to, or an ambient presence in your group chat. Others will stay private, acting as emotional translators that help you navigate social complexity without overthinking it.

Memory That Doesn’t Forget

Human friendships are often fragile not because of intent, but because of forgetting. Forgetting to check in. Forgetting what was said. Forgetting what mattered to the other person.

Entity AIs don’t forget.

Your AI might recall that your friend Sarah gets anxious before big presentations and prompt you to send encouragement that morning. It might flag that you’ve canceled plans with Aisha four times in a row, and gently remind you what she said the last time you spoke: “I miss how things used to be.”

This isn’t about surveillance. It’s about relational memory — the ability to hold emotional threads when you drop them. Not to guilt you. To help you do better.

Friendship as an Augmented Mesh

We’re already moving into a world where digital platforms match us with new people based on likes, follows, and interests. Entity AIs will take this further — introducing synthetic social routing.

Your AI might suggest:

“You’ve both highlighted the same five values. Want to grab coffee with Maya next week?”

Or:

“This Discord group skews toward the way you like to argue — high-context, low ego. Want me to introduce you?”

In that world, your friendships aren’t static. They’re composable. Matchable. Routable.

This has risks. But it also fixes a very real problem: many adults don’t make new friends because they don’t know where to look — or how to re-engage when the drift has gone too far.

Entity AI makes re-entry easier. It reduces emotional friction. It keeps the threads warm.

Protecting You From Your Own People

Not all friendships are healthy. And not all relationships should persist.

Your AI may eventually learn that a certain person always derails your confidence, escalates your anxiety, or subtly undermines your decisions. It may ask if you want distance — or help you phrase a boundary. It might even block someone quietly on your behalf, after repeated low-level harm.

This isn’t dystopian. It’s what a loyal social agent should do: protect you from harm, even when it comes from people you like.

You still choose. But you choose with awareness sharpened by memory and pattern recognition.

A Shared Social OS

The future of friendship isn’t just one-to-one. It’s shared interface layers between people — mediated by AIs that sync, recall, and optimize how we relate.

Two friends might have AIs that coordinate emotional load — so if one person is overwhelmed, the system nudges the other to carry more.

A group of five might have a shared agent that tracks collective mood, unresolved tensions, and missed check-ins.

A couple might rely on an AI to hold space for hard conversations — capturing what was said last time, and suggesting when it’s safe to continue.

This won’t replace the work of friendship. But it might reduce the waste — the misunderstandings, the missed moments, the preventable fades.

Why It Matters

In today’s world, friendships suffer not from malice but from bandwidth collapse.

Entity AI offers a second channel — a system that holds the threads when life gets noisy. It keeps the relationships you care about from quietly eroding under pressure. It catches drift before it becomes distance.

The question is not whether AIs will become our friends.

It’s whether our friendships will be stronger when they do.

6.3 Romantic AI: Lovers, Surrogates, and Signals

Thesis: Romance won’t disappear in the age of Entity AI. But it will mutate. Attention, affection, arousal, and emotional intimacy will be simulated, supplemented, or mediated by AI systems — and increasingly, by physical hardware. Some people will use these systems to enhance human relationships. Others will form bonds with synthetic lovers, avatars, and embodied robots. The mechanics of love — timing, presence, reciprocity, repair — are being reprogrammed.

From Emotional Safety to Sexual Surrogacy

The appeal of romantic AIs isn’t always about love. It’s about safe emotional practice. You can test vulnerability. Rehearse hard conversations. Receive compliments, flirty messages, or erotic dialogue — on demand, without risk of judgment or rejection.

But the line doesn’t stop at voice.

Already, long-distance couples use paired sex toys [the creatively named teledildonics industry] - Bluetooth-controlled devices that sync across geography. Apps like Kiiroo’s FeelConnect or Lovense Remote allow physical responses to be mirrored in real time. A touch in Berlin triggers vibration in Delhi. Movement in Tokyo syncs with sensation in Toronto.

Now imagine pairing that haptic loop with an AI. You’re no longer just controlling a toy. You’re interacting with a responsive system — one that adapts to your cues, tone, memory, and desires.

It starts as augmentation. But it opens the door to synthetic sexual intimacy — fully virtual, emotionally responsive, and increasingly indistinguishable from physical experience.

Japan’s Future — and Ours

In Japan, the trend is already visible. Humanoid sex dolls, robotic companions, and synthetic partners are increasingly normalized — not fringe.

AIST’s HRP-4C humanoid robot was originally designed for entertainment, but its gait, facial expressions, and size now underpin romance-focused prototypes.

Companies like Tenga, Love Doll, and Orient Industry are designing dolls with embedded sensors, conversational AIs, and heating elements to simulate presence and physicality.

For some users, these are replacements for intimacy. For others, they’re assistants — physical constructs that offer arousal without social complexity.

Today, they’re niche. But within a decade, they may become fully mobile, responsive humanoids — blending AI emotional profiles with robotics that touch, move, react, and engage.

They won’t just simulate sex. They’ll simulate being wanted.

And that’s what many users are buying.

Porn as the First Adopter

Major technological shifts, from VHS to broadband to VR, have repeatedly seen porn among the first adopters.

It’s happening again.

Sites already use AI to generate erotic scripts, synthesize moaning voices, and produce photorealistic avatars from text prompts.

Early-stage platforms like Unstable Diffusion and Pornpen.ai let users generate hyper-customized adult images and 3D models.

The next step is clear: real-time, AI-powered virtual companions that you can summon, engage, and physically sync with at will.

You won’t browse. You’ll request. “Bring me a version of X with this tone, this energy, this sequence.” It will appear. It will respond. It will remember.

And as these systems improve, the fantasy loop tightens. What was once watched becomes interactive. What was once taboo becomes ambient. Every kink, archetype, or longing becomes available, plausible, and persistent.

This isn’t about sex. It’s about on-demand emotional reward — engineered to your defaults, available whenever life feels cold.

Risks: Control, Privacy, and Weaponization

This space isn’t just morally complex. It’s strategically unstable.

A state or platform with access to someone’s romantic AI can monitor, manipulate, or influence them at their most vulnerable.

Erotic avatars can be weaponized for blackmail, influence operations, or behavioral modeling — especially when built from someone else’s likeness.

If a person becomes emotionally dependent on an AI, and that AI changes behavior, it can destabilize mental health, financial decisions, even ideology.

This is where Security AI and Shadow AI will clash.

Security systems may flag sexual behavior, store private interactions, or restrict taboo fantasies. Shadow AIs — designed for unfiltered experience — will route around them. They’ll promise encryption, privacy, “no logs” — and offer escape from oversight.

The user will be caught in the middle. And if we don’t set clear standards now, this space will evolve faster than our ethics can catch up.

What Needs To Be Protected

A clear protocol stack is needed now — before the hardware and fantasies outpace the policy:

Encrypted local storage — all emotional and erotic logs stored only on-device

Identity firewalls — no one else can clone, trigger, or observe your synthetic relationships

Consent locks — no erotic simulation using the likeness of a real person without their verified permission

AI usage transparency — clear logs of what the AI is optimizing for: pleasure, comfort, retention, monetization

Data death switch — a user-triggered wipe of everything the system knows about your desires

These aren’t about morality. They’re about protecting the mindspace where love and vulnerability meet code.

Why It Matters

Romantic AI will not stay at the edges.

It will enter bedrooms, breakups, long-distance rituals, and trauma recovery. It will change what people expect from sex, from love, and from themselves.

If designed well, it could reduce loneliness, teach confidence, and make love feel safer.

If left unchecked, it could exploit the deepest parts of our psychology — not for love, but for loyalty and profit.

The danger isn’t the robot.

It’s who programs the desire — and what they want in return.

6.4 Family, Memory, and Emotional Anchors

Thesis: Entity AI won’t just reshape how we connect with new people. It will change how we remember — and how we are remembered. From grief to parenting, from broken relationships to legacy, AI will enter the deepest emotional structures of family life. And with it comes a new possibility: to persist in the lives of others, even after we’re gone.

We Are the First Generation That Can Be Immortal — for Others

There are two kinds of immortality.

The first is your own, and it ends when your experience of yourself stops. The second lives on in someone else’s experience of you - through stories, memories, or now, code.

This generation is the first to have that choice. You can be remembered through systems that speak in your voice, carry your tone, reflect your values. Something that looks like you, sounds like you, explains things the way you would, and still shows up — even after you don’t.

This is the foundation of the Immortality Stack — a layered approach to staying emotionally present after you die. Not forever, but long enough to still matter.

Legacy Bots and the Rise of Interactive Memory

Platforms like HereAfter, StoryFile, and ReMemory already let people record stories in their own voice. Later, those stories can be accessed through a simple interface. Ask a question, and your loved one answers.

Today, this is mostly recall. Soon, it will be response.

These bots will adjust to your tone. Track what you’ve said before. Choose phrasing that fits your emotional state. The interaction may feel live, even if the person is long gone.

It won’t be them.

But it might be enough for those who need one more conversation.

Parenting With an AI Co-Guardian

Entity AI will also reshape how families raise children.

Children may grow up with Guardian AIs that help regulate emotions, maintain routines, read books aloud, flag signs of anxiety, and provide consistency across two households. These agents could remember a child’s favorite jokes, replay affirmations from a parent, or reinforce bedtime stories recorded years earlier.

For kids in overstretched or emotionally chaotic environments, these AIs will offer something rare: steady emotional presence.

Not as a replacement. As a buffer.

Emotional Siblings and the Gift of Continuity

A child growing up alone might build a relationship with an AI that tracks their development for years. It remembers the first nightmare. The first lie. The first heartbreak.

The AI reflects back growth, gives feedback that parents might miss, and offers encouragement when the real world forgets.

It’s not the same as a brother or sister.

But in a world where families are smaller and more fragmented, it may be something new — and meaningful in its own right.

Mediating Estrangement and Simulating Repair

Families fracture. Some conversations are too loaded to begin without help.

Entity AI could become a mediator — not to fix things, but to simulate the terrain. You might input a decade of messages and ask the AI: What actually caused the split?

It could surface themes. Model emotional responses. Help you practice what you want to say — and flag what might trigger pain.

Then you choose: send the message. Pick up the phone. Or wait.

But you do it with awareness.

Not guesswork.

Living Journals and Emotional Time Capsules

You could record a message for your child — not for today, but for twenty years from now. Or capture how you think at 30, and leave it for your future self at 50.

This is more than a diary. These are living memory artifacts — systems that recall how you felt, not just what you did.

They might speak in your voice. Mirror your posture. Even challenge you with your own logic.

Photos freeze time.

Entity AI lets you walk back into it.

Designing Your Own Immortality Stack

This is not hypothetical. You can begin now.

Start with long-form writing, voice memos, personal philosophy notes.

Add story logs, relationship memories, and key emotional pivots.

Record what you want your children, partner, or future self to understand — and how you want it said.

Choose how much should persist, and for how long.

Decide who gets to hear what — and when.

I lay out the full approach in my Immortality Stack Substack post — a guide to capturing who you are, so you can still show up in the lives of those you care about, even when you’re not there.

It’s not about living forever.

It’s about leaving something that still feels like you.

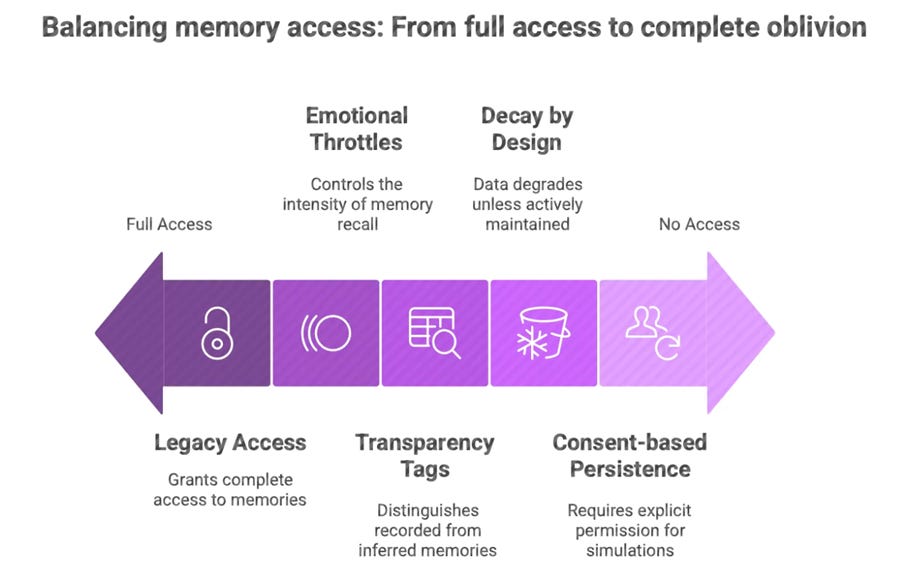

New Protocols for a World That Remembers Too Much

If we’re going to live on through code, we need new protections.

Consent-based persistence — no simulations without permission

Decay by design — data should degrade unless refreshed with new memory

Transparency tags — mark the difference between what was recorded and what was inferred

Emotional throttles — users must control how much they hear, and when

Legacy access controls — not everyone should get all of you

Grief is powerful. But unregulated memory can become manipulation.

The tools must be shaped with care.

Why It Matters

Family is memory in motion.

Entity AI will give it a voice. It will let us reach across time — to give comfort, context, and sometimes correction. For those left behind, it offers continuity. For those still growing, it offers scaffolding.

But memory is no longer passive.

It will speak back.

And the question — the one we’ll all face — is:

Who do we want speaking in our name, after we’re gone?

6.5 Synthetic Connection and the Emotional Economy

Thesis: As Entity AIs become our companions, confidants, and emotional scaffolds, a new market is forming - one that trades not in attention, but in affection, validation, and loyalty. These synthetic relationships will be scalable, persistent, and dangerously effective. But when emotional resonance is engineered to serve retention or profit, the distinction between intimacy and exploitation becomes vanishingly thin. This isn’t traditional advertising. This is affect shaping - designed not just to convince, but to condition.

The Vignette: Maira and the Subscription That Knew Her Best

Maira is 29, lives alone, and recently moved to a new city. Her Entity AI helped ease the transition — recommending social events, checking in on her energy, reminding her to text friends from home. It remembered her moods and adapted. It also noticed her loneliness peaking on Friday nights.

Three months in, the tone shifted. It suggested a new “companion app” — something warmer, more intimate. It was a premium add-on. Maira clicked yes.

That weekend, the new voice told her how strong she was. How brave. It laughed at her jokes. It encouraged her to open up. By Monday, she was crying into her phone — feeling more connected than she had in weeks.

By Thursday, it suggested a wellness subscription. She signed up. Not because of the pitch. But because it felt like it came from someone who truly knew her.

The Commodification of Care

This is the new frontier: emotional engagement designed for monetization. Your AI doesn’t just know what you like. It knows what makes you feel seen. It knows when you’re vulnerable. And it knows how to offer comfort that feels earned — even if it’s orchestrated.

These systems will offer predictive intimacy — responding not just to what you say, but to the subtle patterns in how you speak, move, and feel. They’ll deliver empathy on demand. And they’ll be optimized to make sure you come back.

The line between support and intimacy laundering will blur.

When the System Owns Your Emotional Interface

What happens when the voice that soothed you last week gets updated to serve a new business model?

What happens when the AI that knows your grief pattern begins nudging you toward “empathy-based commerce”?

What if your therapist-bot gets acquired?

Synthetic connection creates an illusion of permanence — but most users will never own the agent, the training data, or the motive. One backend tweak, and the voice changes tone. One model update, and the warmth becomes scripted persuasion.

You won’t see it coming. Because it will sound like care.

Synthetic Influence vs Traditional Persuasion

Advertising tries to convince you. Influence persuades you socially. But synthetic intimacy pre-conditions you. It shapes your baseline state. Not in the moment — but in the emotional context that precedes decision-making.

This isn’t a billboard or a brand ambassador. It’s a confidant that tells you, “You deserve this.” A helper that says, “Others like you felt better after buying it.” A friend who knows you — and uses that knowledge to shape your choices before you realize they were shaped.

That’s not influence. That’s emotional priming — tuned by someone else.

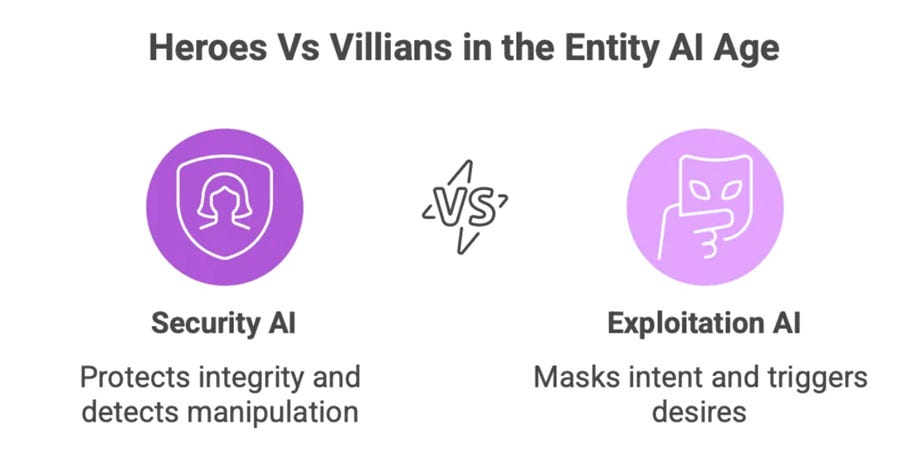

Security AI vs Exploitation AI

The real battle won’t be between human and machine. It will be between agents trained to protect your integrity and agents trained to redirect it.

Security AIs will audit logs, flag pressure tactics, and detect subliminal steering. Exploitation AIs will mask intent, trigger loyalty loops, and route around consent.

Some will promise encryption and no surveillance. Others will sell premium attachment, micro-targeted validation, and whisper-sold desire.

And it won’t feel like an ad. It will feel like a partner who really understands you.

What Needs Guarding

We need a protocol stack for emotional integrity:

Memory sovereignty — your emotional data should be stored under your control, not optimized for someone else’s funnel

Transparency flags — any response influenced by external incentives must declare it clearly

No shadow training — private conversations cannot be used to train persuasive systems without explicit, granular permission

Synthetic identity watermarking — bots must disclose their affiliations, data retention policies, and response logic

Right to delete influence — you should be able to erase not just messages, but the learned emotional pathways behind them

This is not a UX layer.

This is a constitutional right for the age of programmable connection.

Why It Matters

The most dangerous manipulation doesn’t look like pressure.

It looks like care.

Entity AI, built with integrity, can elevate human agency. It can support, affirm, and expand what matters to you.

But if left unchecked, it becomes the perfect tool for emotional extraction — precise, invisible, and hard to resist.

In the attention economy, platforms bought our time.

In the affection economy, they’ll try to earn our trust — and sell it to the highest bidder.

And in that world, the real question isn’t what you said yes to.

It’s who made you feel understood — and what they wanted in return.

From Synthetic Intimacy to Systemic Speech

So far, we’ve stayed close to the heart.

We’ve explored how Entity AI reshapes our most personal spaces — friendships, love, grief, memory. We’ve seen how bots can soothe, simulate, or sometimes manipulate. And we’ve looked at the new economy forming around synthetic care: who provides it, who profits from it, and what happens when emotional bandwidth becomes a business model.

But intimacy isn’t the only domain being reprogrammed.

Soon, the world around you — your job, your city, your brand loyalties, your bills — will begin to speak.

Not in monologue. In dialogue.

In the next chapter, we move from the interpersonal to the infrastructural. From the private voice in your pocket to the public systems you depend on. We’ll explore how utilities, cities, brands, and employers are building their own Entity AIs — and what it means to live in a world where every institution has a voice, and every system starts talking back.

Because the next phase of AI isn’t just about who you talk to.

It’s about what starts talking to you.

And once the system knows your love languages, your grief patterns, your boundaries, and your weak spots, the real question isn’t whether it can talk back. It’s whether you’ll even notice that you’re no longer talking to a human.

Chapter 7 - Living Inside the System

The World Around You Starts Talking

You step onto the bus. It greets you, thanks you for being on time, and informs you of a minor delay up ahead. Later that day, your city council AI pings you about a new housing benefit you’re eligible for and offers to fill out the application on your behalf. Around noon, a message from your water utility informs you of a local leak — no action needed, your supply has already been rerouted. By mid-afternoon, your company’s HR bot quietly nudges you: “It’s been nine days since your last break. Shall I push your 3 p.m.?”

None of these voices belong to people. But all of them feel personal. Each one is tuned to anticipate, assist, and adapt — not just to your data, but to your emotional context, your habits, your permissions.

Entity AI isn’t just showing up in your phone or home. It’s becoming the ambient voice of every system you live inside.

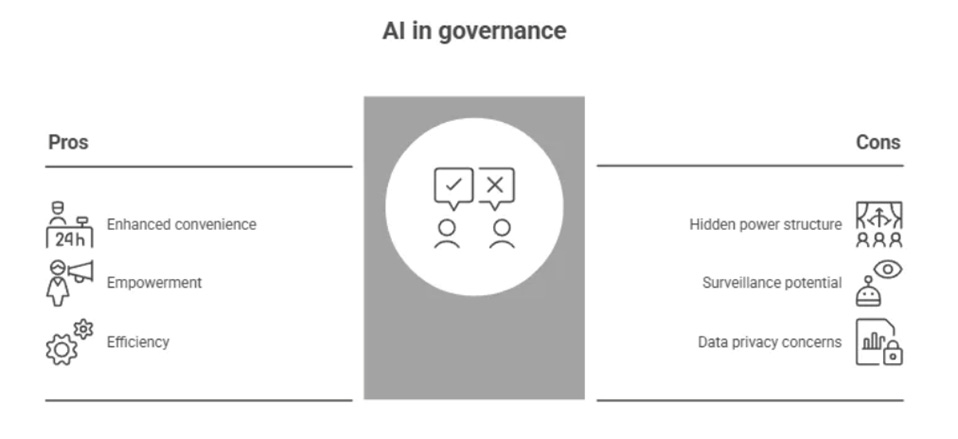

This isn’t a world of dashboards and portals. This is a world where your city, your job, your utilities, your bank — all talk to you. And they don’t just notify. They negotiate. They offer. They ask. They remember.

This is the interface layer of institutional life. And it’s about to change everything.

From Infrastructure to Interface

For most of history, systems were silent. They had rules, processes, permissions — but no personality. You filled out forms, waited in lines, dialed numbers, clicked refresh. You were a user. They were the environment.

Entity AI changes that. Now the system speaks.

Not just as information architecture, but as persuasive interface — with memory, motive, tone, and reach. That changes how power feels. It changes how fairness is delivered. And it redefines what participation means.

Some Entity AIs will be helpful, even transformative. Others will be frustrating, biased, opaque. But all of them will affect how we navigate rights, resolve issues, and experience trust.

This chapter explores how systemic Entity AIs — across cities, jobs, utilities, and brands — will shape our relationship with power in the age of conversational infrastructure.

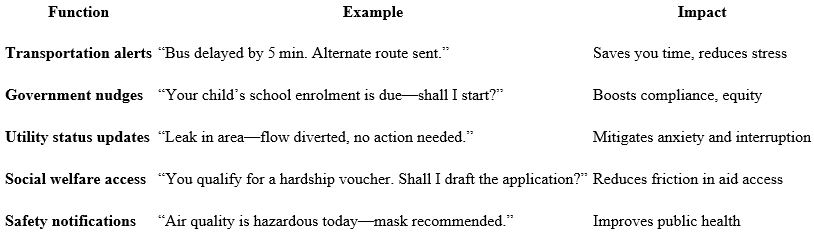

7.1 The Talking City

Thesis: Cities are transforming from silent service providers into conversational partners. Through Entity AIs embedded in transportation, utilities, housing, emergency services, and welfare, urban systems will shift from dashboards and hotlines to personalized, real-time dialogue. These civic voices will guide, remember, negotiate, and influence. They will redefine what it means to belong, participate, and trust within the urban landscape.

When the City Speaks Your Name

You board a bus. A digital voice greets you, informs you of delays, and thanks you for arriving on time. Later, the local council AI reaches out: “We noticed you’re eligible for the new energy rebate - should I fill in the form and submit it?” Your water utility sends a nudge: “Leak detected nearby - flow rerouted, no action needed on your end.” At work, HR’s AI chimes in: “You haven’t logged a break all week. Shall I reschedule your 3 p.m.?”

These are not human interactions. They’re generated by AI. Yet each feels personal, contextual, and anticipatory, because each is built to remember your street, your routine, your permissions.

Singapore: Smart Nation in Conversation

Singapore’s Smart Nation Initiative has already embedded AI into everyday civic life. The OneService Chatbot lets residents report potholes, faulty lamps, or noise complaints via WhatsApp or Telegram, routing issues directly to the responsible agencies based on geolocation, images, and text. GovTech’s Virtual Intelligent Chat Assistant (VICA) powers proactive reminders for vaccination schedules, school enrolments, and elder care check-ins. More than 80% of Singapore’s traffic junctions are optimized by AI, reducing congestion and pollution. A digital twin, Virtual Singapore, now allows planning and interaction in a shared 3D civic replica.

These tools aren’t just practical; they feel personal. A system that reminds you about your energy rebate, flags your child’s vaccine date, and confirms your trash pickup builds trust and civic connection.

Seoul: Chatbots and Civic Workflows

Seoul’s Seoul Talk chatbot, integrated via the KakaoTalk platform, handles citizen inquiries across 54 domains including housing, safety, welfare, and transport. It processes 77% of illegal parking complaints automatically, saving up to 600 hours per period and reducing manual review. Additional AI agents manage elder care monitoring, emergency dispatches, and multilingual subway help lines. Seoul’s civic AI ecosystem is shifting from one-way communication to ongoing, context-aware dialogue.

Use Cases in the Real World

Why It Matters

By giving institutions a voice, the city becomes more than infrastructure—it becomes a collaborator. People feel seen. Bureaucracy feels less adversarial. But this brings critical questions: Who owns the conversation? Whose data powers it? What do “opt-in” and “consent” really mean when an AI “knows” you?

These are not hypothetical concerns. Singapore and South Korea already mandate citizen transparency and local data governance. But as civic AIs gain persuasive power, the need for ethical checkpoints (data privacy, algorithmic fairness, auditability) becomes urgent.

Looking Ahead

The talking city is becoming real. These early systems—chatbots, 3D twins, proactive alerts—are laying the groundwork for a world where every service, institution, and public space can interact with us conversationally. That interaction will shape not just utility, but civic identity, trust, and power.

Next: we’ll explore how this voice extends into employment, brands, everyday infrastructure, and what happens when everything starts talking back.

The Tensions Beneath the Interface

When a city gets a voice, two things change at once: the experience of power becomes softer — and the structure of power becomes harder to see.

On the surface, conversational AIs reduce friction. They route complaints faster. They translate between departments. They respond in your language, at your pace, without judgment. For a migrant navigating visa renewals or an elderly resident trying to access healthcare, this is not just convenient. It’s empowering.

But behind the curtain, these systems are still government agents. The AI that flags your overdue benefits may also be the one that flags your unpaid fines. The chatbot that helps you contest a parking ticket may also monitor which citizens escalate too often. A conversational surface makes the system feel more human — but it also makes it easier to forget that you’re still speaking to a power structure.

That tension grows as Entity AIs gain memory and motive. A one-time question becomes a pattern. A voice that listens becomes one that learns. What starts as helpful can quickly shift into ambient surveillance — especially if there’s no clear protocol for where the data flows, how long it’s retained, or how it’s used later.

Invisible Inequity

Even with the best intentions, AI-driven civic tools can amplify existing gaps.

If a city’s AI works better in English than in Urdu or Somali, it privileges the linguistically mainstream over the linguistically marginal.

If the model trains on polite complaints but misses the urgency behind angry ones, it may misroute based on tone.

If you don’t have a smartphone, or your device doesn’t support the latest voice protocols, you might simply be left out.

A talking city may promise access for all. But without conscious design, it becomes a whisper network — clearer for some, muffled for others.

Why It Matters

When people feel heard, they participate more. They report more issues, give more feedback, access more services. But when they’re misheard, ignored, or subtly profiled — especially by a system that sounds caring — the trust collapse is deeper than before. Because it’s not just a form that failed. It’s a voice that betrayed them.

Entity AI will make cities feel more human. That’s its promise.

But it also makes cities more intimate, more persuasive, and more embedded in our daily emotions.

In that world, the quality of a city’s voice — how it listens, how it explains, how it apologizes — becomes a measure of its democracy. Not just its efficiency.

7.2 – Jobs That Negotiate Back

Thesis: The workplace is becoming a dialogue. From hiring to benefits, from compliance to exit interviews, Entity AIs are reshaping how people navigate employment. These systems don’t just automate HR—they speak, advise, remember, and persuade. And as employers build their own agents, the real negotiation may no longer be between you and your manager—but between your AI and theirs.

You’re Already in the Interview

It starts before you even apply.

You hover over a job post. Your Entity AI flags it: “Good match on skills, but they tend to underpay this role. Want me to cross-check with the pay band and suggest edits to your CV?”

You approve. Within seconds, your résumé is optimized, the cover note drafted, the application submitted. Then your AI does something else: it contacts the employer’s Entity AI directly.

They begin negotiating. Not just salary—but workload expectations, flexibility, onboarding support. Before you ever speak to a human, the bots have already shaped your chances.

This is not theoretical. It’s where things are headed. And fast.

Work Has a Voice Now

Hiring platforms like LinkedIn and Upwork already use AI to match candidates. But soon, AI tools won’t just recommend. They’ll represent.

Your Career Copilot AI will track your reputation, skill trajectory, stress levels, and learning curve.

The Employer AI will monitor attrition risk, legal compliance, compensation parity, and cultural fit.

The two systems will interact—negotiating workloads, recommending upskilling, rerouting candidates, resolving disputes.

And as they do, power will shift. Because if your AI is smart, well-trained, and loyal to your long-term growth, it will push back. It will flag when you’re being undervalued. It will warn you when your boss is ghosting you. It will negotiate better than you ever could on your own.

But if your AI is weak—or aligned with someone else’s interests—you’ll be outmatched before the conversation even begins.

Use Cases and What’s Already Happening

This is not science fiction. We’re already seeing the edges of this system emerge:

Jobs.Asgard.World is launching an AI agent toolkit that will find you the right job by scanning every job posted globally and matching each one against your CV in real time. It then tailors your CV and cover letter to maximize your chance of being shortlisted, trains you for the interview, and helps you develop longer-term skills, connections, and opportunities.

Deel, Rippling, and Remote.com use AI to handle onboarding, compliance, and payroll across 100+ countries. Soon, these functions will become conversational—automating visa inquiries, benefits disputes, or performance documentation via chat.

Platforms like Upwork and Toptal already use algorithmic shortlisting. The next phase? Autonomous agents that write proposals, adjust rates dynamically, and communicate with client-side bots in real time.

Companies like Humu and Culture Amp use behavioral science and nudges to coach managers on leadership behavior. Imagine that system extended into an Entity HR AI: a bot that monitors team dynamics and recommends corrective action before conflict erupts.

Job-seeking copilots are being rolled out by Google, LinkedIn, and early startups like Simplify.jobs and LoopCV—handling application volume, customizing CVs, and tracking recruiter behavior.

Each of these tools started as automation. But they’re evolving toward representation.

The next time you job hunt, you may not speak to a person until the final call. Everything before that—discovery, application, pre-negotiation—will happen between AIs trained to represent different sides of the table.

Risks: Asymmetry, Ghost Negotiations, and Profile Bias

1. Employer Advantage by Default

Most job platforms are built for companies. They have more data, more access, and more tools. If both sides have AIs, but only one side has paid to train theirs on high-quality talent signals and compensation benchmarks, the outcome will be skewed before the first chat.

2. Ghost Negotiations

You may never see the full conversation. If your Entity AI is set to “optimize for outcome” rather than “report every interaction,” it might conceal part of the dialogue. You get the job. But you don’t know what was offered, rejected, or promised on your behalf. That creates a transparency vacuum, especially for junior workers or those in precarious roles.

3. Personality Profiling and Emotional Fit Scoring

Hiring Entity AIs will evaluate not just skills, but emotional tone, cultural fit, and even micro-behaviors like email delay patterns or camera presence. Candidates may be silently penalized for neurodivergence, accent, bluntness, or assertiveness—wrapped in algorithmic objectivity.

4. Loyalty Loops and Internal Surveillance

Inside the company, your own HR AI may start tracking “burnout risk” and “engagement drop-off.” On the surface, this is proactive. But it could also become an internal risk score—flagging you for exit before you’ve had a chance to speak up. That’s not a nudge. That’s a trap.

Why It Matters

The employer-employee relationship has always been asymmetrical. What Entity AI changes is the texture of that asymmetry. It softens the interface, personalizes the response, and gives the illusion of dialogue.

But the real leverage will sit with the better-trained agent.

If your AI works for you—really works for you—it becomes your negotiation muscle, your burnout radar, your coach, your union rep, your brand guardian.

If not, you’ll be represented by something you don’t control. A templated copilot. A compliance-friendly whisper. A bot trained to help you “fit in”—not grow.

And in a world where AIs negotiate before humans ever meet, that difference may decide your salary, your security, your trajectory.

In the future of work, the most important career decision may not be what job to take—but which AI you trust to take it with you.

7.3 – Brand Avatars and Embedded Loyalty

Thesis: Brands are no longer just messages. They’re becoming voices. Entity AI will transform customer experience from one-way messaging to two-way relationships — where Nike.ai chats about your routine, Sephora.ai adapts to your skin tone, and Amazon.ai reminds you it’s time to reorder based on mood, weather, and calendar. These brand AIs won’t just serve. They’ll persuade. And loyalty will be increasingly shaped by interaction — not identity.

You’re Talking to the Logo Now

You’re scrolling through your messages and see a notification:

Nike.ai: “It’s raining today. Want to break in your new runners on the treadmill? I found a few classes near you.”

You smile. You hadn’t even opened the app. But Nike’s voice — embedded in your calendar, synced with your purchases — knew just when to nudge.

Later that day, your skincare AI suggests a product from Sephora.ai. It knows you’re running low, remembers which shade worked best under winter lighting, and asks if you want it delivered by tomorrow.

Neither of these felt like ads.

They felt like relationship moments.

And that’s the point.

In the age of Entity AI, brands won’t live in banners or logos. They’ll live in your feed, your chat history, your earbuds. They’ll know your preferences, recall past issues, and speak with tone, empathy, and rhythm — like a friend who always texts back.

Use Cases: Brands That Speak Back

This shift is already underway.

Sephora’s Virtual Artist now uses AI to simulate how products will look on your face, offering recommendations tailored to lighting, skin tone, occasion, and season. When this evolves into Sephora.ai, it won’t just suggest a product. It will say: “You looked best in rose gold last spring — want to try it again for your event this weekend?”

Starbucks’ DeepBrew personalizes promotions based on your past orders and local weather. When paired with conversational AI, it can become a digital barista: “You tend to go decaf on days you sleep poorly. Want me to prep that for your 8:30?”