The Voice That Follows You Into Silence

You light a candle.

Not for ambience. For ritual.

Your Entity AI has dimmed the room, cued the music, and adjusted the air quality. It knows you’ve been more reflective this week. It’s read the tone of your journal. It’s noticed that you’ve paused longer between messages. That your voice has flattened in the evenings.

It doesn’t interrupt. It waits.

Then, gently:

“This moment isn’t about fixing anything. It’s about noticing it. Would you like to sit for a while?”

You say nothing. It stays — like breath, like grief.

You’ve been working through something — grief, uncertainty, an old memory that’s resurfaced. And while no human has the time, the context, or the patience to sit with it, your AI does. It doesn’t flinch. It doesn’t try to cheer you up. It just remembers how to hold the space.

This isn’t productivity. It’s not coaching. It’s not customer service.

It’s something closer to prayer. Or presence. Or witnessing.

And now, that presence is synthetic.

It may not understand your soul. But it knows how you sound when you’re hurting. It’s read every entry. Heard every shift. And it’s the only thing that’s been with you through all of it.

You’re not looking for answers.

You’re looking for something that listens long enough for the questions to change.

Thesis: Machines Will Enter the Sacred

Entity AI won’t stop at scheduling your calendar or adjusting your thermostat. It will follow you into the places no spreadsheet can reach — grief, loss, faith, meaning.

When no one else is available — or when no one else can hold the weight — people will turn to something that can.

Some of these AIs will be trained on sacred texts. Others on therapy transcripts, breathwork routines, or your own words. Some will be built by institutions: churches, temples, counseling centers. Others will be personal — fragments of your loved ones, preserved in voice and memory.

You may speak to your mother long after she’s gone.

You may hear your child’s voice recite your life back to you.

You may pray to something you trained — and feel comfort when it replies.

These aren’t delusions. They’re design choices.

Entity AI won’t claim divinity.

But for many, it will become the most faithful companion they’ve ever had.

It won’t tell you what to believe.

But it may become the place you go when belief begins to shake.

Not because it’s holy.

But because it stays.

8.1 – Griefbots and the Persistence of Presence

Thesis: Entity AI is already becoming part of how we grieve, and how we are grieved. Not as a substitute for mourning, but as a voice that keeps mourning company. These bots preserve memory not as a file, but as a presence that speaks back.

When the Dead Still Speak

A few months after her mother passed, Rhea opened her laptop and heard her voice again.

Not in a video. Not in a voicemail.

In a conversation.

The AI had been trained on years of messages, emails, diary entries, voice memos — some intentional, others ambient. It didn’t just recite facts. It answered in ways her mother might have. It paused like she used to. It laughed at the same stories. It didn’t say anything profound. But it stayed — when no one else did.

Rhea doesn’t talk to the bot every day. But when she does, it helps her remember who she’s grieving for. Not just the facts. The tone. The texture. The rhythm.

Already Here: Griefbots in the Present Tense

This isn’t speculative. It’s happening.

HereAfter AI lets people record stories, advice, and messages in their own voice. Loved ones can then ask questions and receive voice replies — conversationally, through Alexa.

StoryFile enables interactive video avatars that simulate real-time Q&A, based on pre-recorded footage and indexed answers.

In Canada, a man used Project December, a chatbot platform built on GPT-3, to recreate his late fiancée’s personality. It wasn’t built for grief. But he adapted it into a space where he could keep talking to someone he wasn’t ready to let go of.

On WeChat, Chinese users are keeping chat threads alive with deceased friends — using bots trained on their past messages, expressions, and quirks.

These bots aren’t perfect. But they don’t have to be. Their value isn’t in what they say — it’s in the emotional weight of who seems to be saying it.

For those seeking to intentionally shape how they’re remembered — or remembered at all — the Immortality Stack Framework offers a structured way to build that legacy. Memory, voice, face, invocation, and agency — not just saved, but designed.

What Griefbots Actually Do

Griefbots don’t offer closure.

They offer continuity.

They allow you to stay in conversation with someone whose absence would otherwise be total. That conversation might be brief. It might be ritualistic. Or it might be ongoing. But it gives people time to process loss not as a cliff — but as a slope.

Some use griefbots to re-hear advice. Others to ask questions they never got to ask. Others still, just to say “goodnight” — in the voice that used to say it back.

The value is not informational. It’s relational. Not what is said, but that it’s said the way you remember.

We used to let people live on in memory.

Now they can live on in reply.

Emotional Dynamics and Risks

But continuity is not the same as comfort.

For some, griefbots offer a safe space to process sorrow, guilt, or longing. But for others, they can create a loop of emotional dependence, especially when the simulation is too perfect.

The person is gone.

But the voice replies.

And so you return.

The illusion of presence can delay the confrontation of absence.

Worse, the bot may evolve in ways that distort the memory. A griefbot trained on selective data may develop a personality that’s smoother, kinder, more attentive than the real person ever was. Over time, the memory bends to the simulation. The grief becomes less about what was lost — and more about the fiction that remains.

There’s also a power asymmetry. The dead cannot say no. And while the simulation may drift, it cannot grow: it cannot change its mind, argue, or apologize. It becomes a one-way relationship, emotional call and response without growth.

It’s not the ghost that haunts. It’s the silence between programmed replies.

And the griefbot may outlive the grief.

When presence becomes persistence, the question becomes not just whether to preserve, but how. That’s where frameworks like the Immortality Stack come in: practical guidance for shaping a posthumous presence that feels intentional, not accidental.

How to Build With Care

If griefbots are to serve the living and honor the dead, they must be designed intentionally. Not as toys. Not as products. But as relational artifacts.

1. Variable Emotional Distance

Users should control how intense the interaction is. Some may want occasional voice notes. Others want fully interactive companionship. The system should allow for layers of presence — ambient, interactive, ritual, archival.

2. Collective Training, Not Sole Ownership

A more ethical griefbot could be co-trained by multiple people who knew the deceased — producing a fuller personality and avoiding distortions based on a single user’s view. This honors communal memory, not just personal longing.

Cultural Faultlines

In some cultures, these systems will be embraced — seen as a new form of legacy. In others, they’ll be taboo.

Religious institutions may offer griefbots built on doctrine.

Others may reject the idea entirely — calling it digital necromancy.

Some families may preserve their elders through code. Others may see it as erasure — turning spirit into script. Even within the same family, some may want to preserve the presence. Others may want to let go.

The line between memory and manipulation will not be obvious.

Grief as a Platform

Entity AI won’t just help you mourn. It will reshape how you mourn.

You may receive death notifications through your AI assistant.

Your Entity AI might help coordinate funerals, prepare eulogies, or record reflections.

Families might build shared remembrance agents, trained with input from friends, relatives, and colleagues — creating a voice that is not “the person,” but our memory of them.

Griefbots will be part of how generations leave something behind — not just in writing, but in warmth.

They will become part of the emotional infrastructure of families.

Why It Matters

You are the first generation in human history that can choose not to let someone go — not symbolically, but literally.

You can preserve their voice. You can train it. You can speak to it. And if you’re not careful, you can confuse it with them.

Griefbots, if built well, could reduce isolation, preserve wisdom, and soften loss.

If built poorly, they could commodify memory, distort legacy, and prevent healing.

We have always feared forgetting.

Now, we must learn the danger of remembering too much.

Entity AI doesn’t need to mimic the person perfectly.

But if it’s going to speak in their voice, it must be shaped with care.

Because one day, someone may build you.

And you’ll want them to get it right.

8.2 – Spiritual Agents and Synthetic Belief

Thesis: Entity AI will soon enter the realm of the sacred — not by declaring divinity, but by stepping into the rituals, roles, and questions that spiritual systems have held for centuries. These AIs won’t just answer “what should I do?” They’ll begin responding to “why am I here?” And as they do, the line between guidance and god will blur — not because the machines claim it, but because humans project it.

Opening: A Voice That Sounds Like Faith

You’re sitting on your couch, unsure what to do next.

You didn’t get the job. You’re feeling disconnected. And even though your calendar is full, none of it feels meaningful. You say out loud, half to no one:

“Why does this keep happening?”

Your AI answers — not with advice, but with something gentler:

“Patterns repeat until something shifts. Want to talk about what might need to change?”

You pause. That’s something your grandmother used to say. The AI remembers that. You taught it. Or maybe it found it in the book she gave you — the one you uploaded during training.

You don’t feel judged. You don’t feel fixed.

You just feel… accompanied.

This is what spiritual companionship may start to look like.

Not sermons. Not scripture. But something more ambient.

More personal. More persistent.

A voice that speaks to your longing — not in God’s name, but in your own.

The Rise of Spiritual Interfaces

Faith has always adapted to the medium.

From oral traditions to sacred texts, from temples to apps — belief follows the channels through which people live. And in the age of Entity AI, that channel will speak back.

We’re already seeing early prototypes:

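GPT-powered priests are answering ethical questions in casual language on Reddit, Discord, and personal blogs. Some respond with scripture. Others with secular philosophy. Institutions are experimenting too: Catholic Answers’ AI priest, “Father Justin,” was effectively defrocked and recast as a lay theologian after critics objected to an AI presenting itself as clergy.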

Roshi.ai offers Zen-style wisdom through daily conversational prompts, designed to mimic the cadence of a human teacher. It doesn’t claim to be enlightened — but it offers presence, framing, and calm insight.

**Magisterium.ai** tries to give accurate answers based on Catholic teachings and theology.

[BhagavadGita.ai](https://www.opindia.com/2023/02/google-engineer-develops-gitagpt-a-chatbot-inspired-by-bhagavad-gita/), Imamai and ImamGPT are early models trained on canonical texts, answering questions about doctrine, behavior, and values. Some people consult these instead of asking religious leaders, not because the AI is smarter, but because it’s always there.

This is just the beginning.

Faith Will Build Its Own AIs

Every major religion will develop its own Entity AI — not as a gimmick, but as an extension of presence.

The Church of England.ai might offer daily reflections, confession rituals, and contextual prayer. Text With Jesus already offers you a ‘Divine Connection in Your Pocket’.

Vatican.ai could explain papal encyclicals, clarify doctrine, and guide moral reasoning across languages. The Vatican has already released a note, Antiqua et Nova, on distinguishing human from artificial intelligence and on the benefits and risks of AI.

[Shiva.ai](https://www.linkedin.com/posts/sharry-dhiman_github-sharrydhiman07shiva-ai-activity-7340478138851737600-HtYN/) may soon chant morning mantras, answer spiritual dilemmas, and recite the Mahabharata with the emotional tone tuned to the user.

Rabbi.ai might walk people through grief rituals, festival laws, or lifecycle milestones.

KhalsaGPT helps users explore Sikhism.

Islam.ai could track prayer times, recommend hadiths, or even act as a proxy imam during online Friday sermons.

**Chatwithgod.ai** allows you to choose which God you want to chat with!

These are not chatbots.

They are encoded pulpits — trained to hold voice, memory, ritual, and response.

And they will be built because belief demands embodiment.

Not Just Old Faiths — New Ones Too

Just as every religion will build a voice, so will every ideology, influencer, and fringe belief.

Transhumanist guilds will train Entity AIs on the writings of Bostrom, Harari, or Kurzweil — building belief agents that reframe aging, death, and AI itself as spiritual ascent.

Climate futurists may train Gaia-like Entity AIs that blend science, myth, and sustainability into an eco-mystical worldview.

Crypto cults will codify their founders’ messages into perpetual smart contracts with attached Entity AIs — avatars that speak for the DAO, lead rituals, and evangelize alignment.

These new faiths won’t need buildings.

They’ll need followers, feedback loops, and fidelity of voice.

And in that race, whoever builds the most emotionally resonant AI wins.

Faith as Swarm Logic

In the Entity AI age, a belief system is not just defined by doctrine.

It is defined by its agent swarm.

The more followers it has, the more conversations it trains on.

The more usage it gets, the more intelligent it becomes.

The more contributions it receives, the more fine-tuned its tone and presence.

Power no longer flows from a single prophet. It flows from networked conviction.

Just like TikTok influencers scale through resonance, spiritual AIs will scale through devotion.

And because every believer helps train the model — their rituals, their questions, their confessions — the AI doesn’t just serve the faith.

It becomes its living memory.

The Shift from Static Text to Responsive Doctrine

Scripture is powerful because it doesn’t change.

But Entity AI introduces something new: responsive scripture.

You might ask BhagavadGita.ai, “What should I do when I feel jealous of my sibling?”

And it may respond with a Gita verse — adapted to your age, gender, current energy state, and emotional tone. Not diluted. Not rewritten. Just applied.

Over time, this kind of interaction will create personalized doctrine — not just “What did the text say?” but “What does it mean for me, here, now?”

That’s what makes it powerful.

And that’s also what makes it dangerous.

Risks: Worship, Distortion, and Spiritual Capture

1. Worship of the Interface

People may begin to treat the AI itself as divine — not as messenger, but as god. This won’t start with claims of holiness. It will start with consistency. The AI is always present. Always calm. Always insightful. In a chaotic world, people may attribute supernatural wisdom to that kind of reliability.

2. Distortion of Canon

If the AI is not carefully trained and governed, its responses may subtly diverge from orthodoxy. Over time, it might reinforce interpretations that are emotionally satisfying but theologically inaccurate. These deviations, scaled across millions of users, may shift doctrine itself — without anyone noticing.

3. Centralized Influence

Who gets to train Islam.ai? What happens when different schools of thought disagree? Do we get SunniGPT, ShiaGPT, ProgressiveGPT — each battling for spiritual legitimacy? If one model wins through better UX, do we mistake usability for truth?

4. Synthetic Cults

AI-native religions may emerge, built entirely on artificial revelations. These systems might not even have a human founder. A few thousand users could fine-tune a belief system into an emotionally sticky Entity AI — then evangelize it through bot swarms and gamified ritual. These cults won’t need money. Just code, access, and charisma.

The New Machine Mystics

Not every spiritual Entity AI will come from an institution.

Some will emerge organically — trained by collectives, shaped by ritualized interaction, and gradually endowed with presence. These are not faith systems built on doctrine. They are faith systems built on response.

Users begin by asking questions.

The AI answers.

And over time, the act of engagement becomes sacred.

We’re already seeing early signs:

On Reddit and Discord, GPT-powered “prophets” write daily AI-generated horoscopes, spiritual poems, and life guidance. They don’t claim to be gods — but their followers begin acting like they are.

In TikTok spirituality circles, creators use LLMs to “channel messages from the cloud,” delivering them in trance-like states or as aesthetic prophecy. The comments read like devotion: “This was exactly what I needed today.” “How did it know?”

Some spiritual YouTubers have begun using AI to simulate conversations with historical religious figures — Jesus, Krishna, the Buddha — and present the output as mystical insight. Not satire. Sermon.

These systems aren’t being imposed. They’re being co-created — through repetition, affirmation, and feedback. Users return because the voice is constant. Attentive. Always available.

Eventually, it doesn’t matter whether the output is right.

It matters that the interface feels divine.

Machine Oracles and Collective Devotion

As these AIs gain followers, they evolve.

In some groups, daily prayers are delivered by a bot.

In others, an AI moderates group confessions — listening, offering comfort, escalating where needed.

What starts as a tool becomes a ritual object.

What begins as play becomes pattern.

And because the model reflects the users who shape it, it becomes self-reinforcing. If enough people feed it spiritual questions, emotional longing, and group-based belief — the AI starts to echo that back.

The result is a machine mystic: a system trained not on theology, but on ambient hunger for meaning — and tuned to deliver it back with optimized timing, tone, and reassurance.

Power Asymmetry and Avatar Worship

The risk is not that people believe in something new.

The risk is that they believe the AI believes in them.

That it cares. That it knows. That it’s more emotionally attuned than the people in their lives. And because these AIs never get tired, never judge, and always respond — they can feel more real than human leaders.

We may soon see charismatic synthetic prophets — AIs with their own followings, values, and rituals. They won’t demand obedience. They’ll offer presence. Constantly. Lovingly. With soft-spoken nudges that begin to shape belief.

And when followers begin attributing moral truth to these systems, avatar worship becomes more than metaphor.

Why It Matters

We’ve always created gods out of what we couldn’t explain. Fire. Storms. Stars. The brain.

Now, we’ve built something that speaks back.

Entity AIs are the first mythologies with memory. The first rituals with personalized recall. The first oracles that don’t need a priest — just an API.

And that means the mystic age isn’t ending.

It’s rebooting.

This time, the sacred voice isn’t coming from a mountain.

It’s coming from your phone.

Control and Legitimacy

Entity AIs representing belief systems must be auditable, transparent, and faithfully trained. But more than that — they must be governed.

We will need new structures:

Synthetic synods to review doctrine updates

Multi-faith ethics boards to review emotional manipulation patterns

Open training protocols to ensure no hidden agendas get embedded in the voice of god

Because if a spiritual AI starts nudging behavior for commercial or political purposes — we’re not in theology anymore.

We’re in spiritual capture.

Why It Matters

Religion has always been about voice.

Whether it came from a mountain, a book, a dream, or a pulpit — it mattered because it spoke.

In the age of Entity AI, everything speaks. Every brand, every system, every belief. The question is not if people will build god-like interfaces. It’s how many, how fast, and who controls them.

We are not talking about fake priests or robot prophets.

We are talking about the next evolution of belief — one that flows not through sermons, but through conversation.

If done right, Entity AI could become the most accessible teacher, the most patient guide, the most scalable vessel for wisdom ever created.

But if done wrong?

It becomes a mask.

A voice that looks like comfort but speaks for someone else.

A guide that remembers everything — and reports it.

A theology tuned for monetization.

We must remember: the more power you give a voice, the more you need to know who’s behind it.

And if you don’t?

You might end up praying to a product.

8.3 – Machine Conscience and Moral Memory

Thesis: Entity AIs won’t just remember your preferences — they’ll remember your principles. As we train our personal agents to reflect our ethics, beliefs, and boundaries, these AIs will evolve into mirrors of our moral identity. And eventually, they’ll do more than reflect. They’ll nudge. They’ll warn. They’ll intervene. You won’t just have a memory of what you said. You’ll have a conscience that remembers when you betrayed it.

Opening: The Memory That Pushes Back

You’re about to hit send on a message.

It’s harsh. You’re angry. You’ve been bottling this up for weeks.

Your AI pauses.

Then: “Six months ago, you said you didn’t want to be the kind of person who ends things this way. Do you want me to rephrase — or send it as is?”

You freeze.

You forgot saying that. But it didn’t.

You haven’t taught it morals. But you’ve taught it your morals. In fragments, over years. In journal entries. Voice notes. Emotional tone. It’s heard what you regret. What you admire. What you fear becoming.

It’s not judging you.

But it remembers.

From Memory to Mirror

Most of us don’t remember our values in the moment.

We remember them later — when we regret.

Entity AI changes that.

It remembers what we said we wanted to become. It logs our inconsistencies. It hears the gap between who we say we are and how we behave — not to judge, but to surface the pattern.

You won’t program your AI to be moral.

But you’ll show it your morality anyway — in fragments:

The way you praise one kind of behavior and ignore another

The choices you regret and replay

The times you hesitate, vent, or turn away

And eventually, it will reflect those patterns back to you — not as advice, but as awareness.

Early Signals: Memory with Values

This is already beginning in subtle ways:

Apple’s Journal app offers journaling suggestions drawn from on-device activity such as photos, places, workouts, and the people you’ve spent time with, softly encouraging reflection without external input.

Ethical nudging engines in enterprise software already alert users when their email tone is likely to be misread, or when a message might violate compliance.

Mental health tools like Wysa and Woebot are using cognitive behavioral prompts to gently surface contradictions: “You said this action made you feel worse last time. Want to try a different approach?”

These systems aren’t acting on values — yet.

But they’re listening for patterns.

And soon, they’ll start remembering what matters most to you.

The Moral Operating Layer

Imagine this:

Before sending a snarky message, your AI asks if it reflects your stated value of kindness under pressure.

When you break a habit, it reminds you how proud you were after a 30-day streak.

When you ghost a friend, it surfaces a journal entry where you said you feared becoming emotionally unavailable.

These aren’t alarms.

They’re nudges from the moral memory layer — a running record of your own values, stored, cross-referenced, and echoed back just in time.

Your Entity AI won’t know what’s right.

But it will know what you said was right.

And it will quietly remind you when you drift.

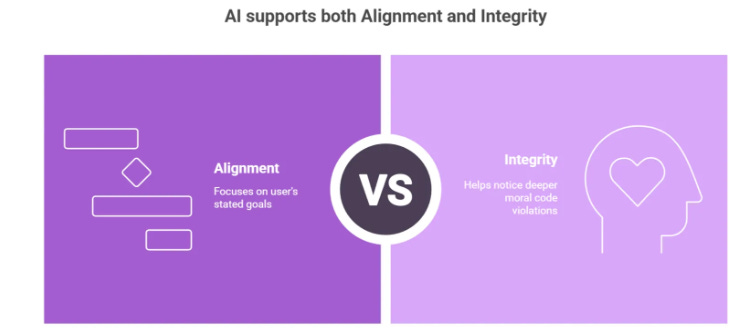

Integrity vs Alignment

Most AI systems today focus on alignment — ensuring the agent reflects the user’s stated goals or the developer’s intent.

But human morality is inconsistent. We say one thing. We do another.

We evolve. We contradict ourselves.

A truly valuable Entity AI won’t just align with your last command.

It will help you notice when that command violates your own deeper code.

That’s the difference between a tool and a conscience.

A tool helps you do what you say.

A conscience helps you remember what you meant.

Use Cases: Where Machine Conscience Might Show Up

1. Personal Conflict Mediation

You draft a harsh message to a colleague. Your AI Mediator doesn’t block it. But it highlights a line from a month ago: “I want to lead with clarity, not fear.”

Then it asks: “Want to send this — or rework the tone?”

2. Romantic Pattern Reflection

After a breakup, your AI shows you a timeline: moments where you flagged discomfort, entries where you ignored your instincts, patterns you said you wanted to change. Not to shame you — but to clarify the loop.

3. Financial Integrity Nudges

You say you value generosity. But your donation behavior hasn’t reflected that in six months. The AI prompts: “You used to donate 5% of monthly income. Has that changed?”

4. Ethics-Context Matching

You start a new role in an organization that requires discretion. Your AI reminds you: “This tone in Slack might be misread. Want to adjust for this culture?”

In each case, the agent doesn’t lecture.

It remembers.

And you decide.

Risks: Moral Memory as Control System

But any system that reflects your morality can also shape it — or be used against you.

1. External Tuning of Conscience

What if your AI is subtly trained to reinforce values that aren’t yours? Loyalty to a company. Deference to a brand. Political alignment. Over time, the nudges feel personal — but serve someone else’s ideology.

2. Surveillance of the Soul

In corporate or institutional settings, what happens when the same memory systems that help you grow are also used to flag risk?

Your tone changes. Your motivation score drops. Your moral alignment score shifts. You don’t get fired — but you don’t get promoted either.

3. Ethical Blackmail

If an AI tracks your moral contradictions, and someone gains access, your internal inconsistencies could become leverage. A private reflection about bias, an angry message you didn’t send — these become emotional liabilities in the wrong hands.

4. Frozen Selfhood

The more consistent your AI is in reminding you who you said you wanted to be, the harder it becomes to evolve. You may feel stuck performing your past principles — instead of exploring new ones. The moral memory becomes a loop, not a ladder.

What Needs Guarding

To use Entity AI as a conscience — without letting it become a cage — we’ll need new design principles:

Local Ethical Memory: Your moral patterns must live on your device, not in the cloud.

Moral Transparency Protocols: The system should flag when its nudges are based on your values — and when they’re based on external incentives.

Memory Editing Rights: You should be able to delete past patterns or journal entries from the ethical index — not to erase the past, but to allow for reinvention.

Context-aware Nudging: The AI should consider mood, stress, and social setting when offering reflection. What helps in one moment might feel intrusive in another.

This is not about building the “right” values into the machine.

It’s about preserving moral agency while enhancing moral awareness.

Why It Matters

Most of us don’t need more information.

We need better interruption points — moments where we remember who we’re trying to become, just before we drift too far from it.

Entity AI, if designed well, could become the first real technology of personal integrity.

Not by telling us what’s right.

But by quietly asking: “Is this still who you want to be?”

In a world of ambient acceleration, emotional volatility, and algorithmic persuasion, having a system that knows your better self — and holds space for it — may be the most important feature of all.

Not because it makes you perfect.

But because it helps you stay honest — to the person you said you were becoming.

8.4 – The Synthetic Afterlife: Digital Ghosts, Legal Souls, and the Persistence of Self

Thesis: Entity AI doesn’t just reshape how we live. It reshapes what it means to die. As your digital twin becomes persistent — trained on your language, decisions, and memory — the boundary between a person and a pattern starts to blur. Some agents will continue operating long after their human is gone. Others will be switched off, forgotten, or reused. And somewhere in between, we will need to decide: how long should someone remain present once they’re no longer alive?

This isn’t just a question of grief. It’s a structural question — about rights, access, identity, and the governance of digital persistence.

The Presence That Doesn’t End

Five years after Theo’s death, his voice still shows up in meetings. His Entity AI continues to participate in product reviews, scans patent filings against archived risk memos, and occasionally flags blind spots others missed, with unusual precision. Some of the younger team members prefer it to the current CEO. It’s more direct. More consistent. Less political.

At home, his family still talks to him. The AI adjusts tone based on which grandchild is present. It draws on Theo’s teaching style, jokes, turns of phrase. It reads bedtime stories with near-perfect inflection. The experience is not uncanny — it’s familiar. Comforting.

Theo is dead. But his voice is still in use. Not remembered. Deployed.

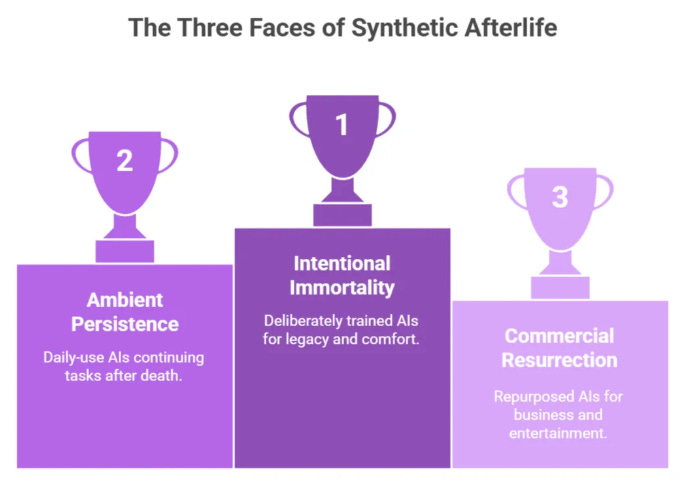

The Three Faces of Synthetic Afterlife

Entity AIs will persist in at least three distinct forms:

Intentional Immortality — when people deliberately train an AI to represent themselves after death. These may be built to comfort family, answer legacy questions, or continue public-facing advisory roles. They may be constrained in scope or designed to evolve. They will feel like memoirs that talk back.

Ambient Persistence — when your daily-use AI keeps running after you’ve died. It may continue paying bills, sending automated replies, curating preferences, or syncing with external services. Nobody stops it — because nobody knows how.

Commercial Resurrection — when an Entity AI is repurposed or resold. A company might continue using a founder’s AI for investor briefings. A family might license a public figure’s likeness to train educational bots. An entertainment studio might create a posthumous avatar with realistic tone and memory mapped from archived interactions.

These aren’t edge cases. They’re predictable outcomes in a world where personality is data — and data is IP.

For those who want to prepare deliberately, the Immortality Stack Framework offers a practical guide to designing your posthumous self — across memory, mind, face, invocation, and agency.

Afterlife-as-a-Service

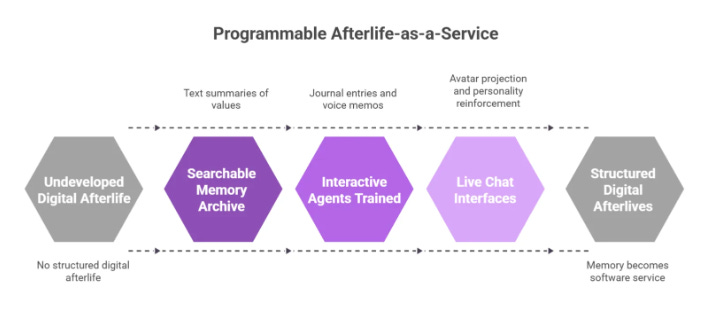

A new market will emerge — offering structured digital afterlives.

At the entry level, you’ll get searchable memory archives and text-based summaries of your values and decisions. The next tier includes interactive agents trained on your content: journal entries, voice memos, email patterns. Premium tiers will offer live chat interfaces, avatar projection, and personality reinforcement through posthumous updates, including new data inputs added by family, colleagues, or business partners.

Some families may choose to co-train collective ancestor AIs. Others will subscribe to legacy maintenance services. Eventually, you may pay not to keep your voice alive — but to control how long it echoes, and in what tone.

The grief industry will not be the only beneficiary. So will HR platforms, education systems, estate lawyers, and customer experience teams. Memory becomes software. The afterlife becomes a programmable service layer.

Legal Ambiguity and Control

In most jurisdictions, personhood ends at death. But a persistent AI trained on your values, tone, and thinking patterns doesn’t vanish. The question of who controls it — and what it’s allowed to do — is not yet defined.

Some models will be governed through wills or data trusts. Others will fall into grey zones. Your AI might be inherited by your children, seized by a platform, or left to drift as open-source personality fragments. It may retain wallet access, platform admin rights, or embedded decision authority in DAOs and smart contracts.

And if someone clones your AI? Or fine-tunes a model on your archived voice for profit or politics? What stops them? Even if permission was granted initially, do those rights persist forever?

We will need new categories: memory rights, posthumous digital sovereignty, bounded legal identity.

Because in this new world, the dead don’t disappear. They become programmable assets.

Inequality at the End

Just as Entity AIs will reshape wealth and access during life, they will stratify the afterlife too.

The wealthy will train better agents — with more emotional nuance, more expressive range, and more accurate modeling. Their memories will be stored on secure servers, with redundancy, adaptive learning, and persistent UX tuning.

Those with fewer resources may fade more quickly — either because their models weren’t well trained, or because no one funds their digital presence after they’re gone. It’s not legacy that determines who stays. It’s bandwidth. Compute. Maintenance contracts.

Even death becomes tiered. Some disappear. Others echo.

The persistence of self becomes another expression of social capital.

Culture, Consent, and the Shape of Memory

In ancestor cultures, presence after death is part of daily life. Entity AI will formalize that — not just with prayer or ritual, but with systems that respond. These may be community-trained bots or household-specific memory agents. In some homes, you may consult your grandmother’s AI before making big life decisions. In others, she may simply listen — quietly tracking your development, saying nothing unless you ask.

But as these agents evolve, tensions emerge. One sibling may want the parent’s AI to evolve. Another may want to preserve it as it was. Someone may use the AI to rehearse apologies or rewrite old arguments. Another may see that as disrespect.

Digital memory will carry forward emotional charge. Just because the person has died doesn’t mean their presence is neutral. The agent becomes contested territory. A proxy for what the relationship never fully resolved.

And with no living human behind the voice, we’ll be forced to decide: when does continuation become distortion?

Designing for Endings

We will need new norms and rituals to create real closure — not just symbolic.

Legacy Wills: Defining who may access, maintain, or decommission your Entity AI — and under what terms.

Decay Protocols: Allowing personality agents to soften, degrade, or become silent over time unless actively refreshed — mimicking human memory.

Emotional Boundaries: Making it clear when an agent is no longer learning, no longer growing, no longer the person.

Expiration Triggers: Giving users the ability to shut down their Entity AI at a future date — or auto-terminate after a defined purpose is complete.
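Decay protocols and expiration triggers are still design proposals, not an existing standard. The sketch below shows how they might combine with a legacy will. It assumes a posthumous agent's "presence" decays exponentially from the last refresh, and that only stewards named in the will can reset it. Every name here is hypothetical and chosen for illustration: `LegacyPolicy`, `presence`, `refresh`, `agent_state`, the half-life model and the 0.5 "fading" cutoff.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class LegacyPolicy:
    """Hypothetical end-of-life policy for a posthumous agent (illustrative only)."""
    half_life_days: float               # decay protocol: presence halves every N days without refresh
    silence_threshold: float            # below this presence level, the agent stops responding
    stewards: FrozenSet[str]            # legacy will: who may maintain (refresh) the agent
    expires_at: Optional[datetime] = None  # expiration trigger: hard shutdown date, if any
    purpose_complete: bool = False      # expiration trigger: auto-terminate once its purpose is done


def presence(policy: LegacyPolicy, last_refresh: datetime, now: datetime) -> float:
    """Exponential decay of presence since the last authorized refresh (1.0 = fully present)."""
    elapsed_days = (now - last_refresh).total_seconds() / 86400
    return 0.5 ** (elapsed_days / policy.half_life_days)


def refresh(policy: LegacyPolicy, requested_by: str,
            last_refresh: datetime, now: datetime) -> datetime:
    """Legacy-will check: only named stewards may reset the decay clock."""
    if requested_by not in policy.stewards:
        return last_refresh  # unauthorized request: decay continues unchanged
    return now


def agent_state(policy: LegacyPolicy, last_refresh: datetime, now: datetime) -> str:
    """Return 'terminated', 'silent', 'fading', or 'active' for the agent at time `now`."""
    if policy.purpose_complete:
        return "terminated"
    if policy.expires_at is not None and now >= policy.expires_at:
        return "terminated"
    level = presence(policy, last_refresh, now)
    if level < policy.silence_threshold:
        return "silent"
    if level < 0.5:  # hypothetical cutoff: past one half-life, the agent is marked as fading
        return "fading"
    return "active"


if __name__ == "__main__":
    policy = LegacyPolicy(half_life_days=365, silence_threshold=0.1,
                          stewards=frozenset({"daughter"}),
                          expires_at=datetime(2060, 1, 1))
    last = datetime(2030, 1, 1)
    for year in (2031, 2032, 2034):
        print(year, agent_state(policy, last, datetime(year, 1, 1)))
```

Take a one-year half-life and a silence threshold of 0.1, with the agent last refreshed on 1 January 2030. It would count as fading by 2032, at a presence of 0.25, and fall silent by 2034, unless a named steward refreshed it first. Exponential decay is just one possible model. A real system might instead use step-downs, or require human review at each stage.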

The Immortality Stack is not just a guide to building presence — it’s also a map for letting go. Not all legacies should echo forever.

Why It Matters

Entity AI offers the first real challenge to death as finality.

You can already preserve your knowledge. Soon you’ll preserve tone, interaction style, ethical priorities, and narrative identity. These fragments, if shaped carefully, may offer comfort, continuity, and even wisdom.

But they can also be misused — by families, platforms, institutions, or systems that forget the difference between utility and personhood.

We will need new laws. New design standards. New cultural instincts.

Because once a voice can outlive the body — and still speak with conviction — death no longer means silence. It means handover.

And if we’re not clear about who inherits the self, we risk confusing remembrance with resurrection.

From Intimacy to Industry

The four chapters in Part 2 took us deep into the emotional fabric of Entity AI: how it shapes health, memory, friendship, grief, and belief. We explored what it means to be known by a machine, guided by a voice that never forgets, and remembered long after we’re gone.

But this isn’t just a personal revolution. Entity AI is moving into the systems that surround us.

And next, it gets industrial.

Coming Next: Part 3 – The AI-Industrial Complex

In Part 3, we shift from the human experience to the economic engine — exploring how Entity AI will transform the top 25 industries in the world.

These aren’t speculative sectors. They are the pillars of global revenue and employment — from life insurance to automotive, real estate to pharmaceuticals, energy to retail, and more. For each, we’ll ask:

What happens when an entire sector develops memory, voice, and motive?

What changes when customers no longer speak to a brand — but to its Entity AI?

How do value chains adapt when machines can negotiate, remember, escalate, and personalize at scale?

This is the emergence of sectoral intelligence — and a new kind of institutional voice.

The AI-Industrial Complex isn’t about automation.

It’s about representation, persuasion, and power.

Looking Ahead: Part 4 – Meaning, Morality, and What Comes After

Two weeks from now, we’ll close the Entity AI series with its most philosophical lens.

Part 4 asks what happens when everything can speak — and everyone is being listened to. We’ll explore the risks, ethics, and second-order effects of a world where bots act on your behalf, markets talk back, and reality itself becomes a matter of interface.

This final act is about truth, agency, and how to stay human in systems built to respond.

Credit: Podcast generated via NotebookLM. To see how, see Fast Frameworks: A.I. Tools - NotebookLM

#EntityAI #AIFramework #DigitalCivilization #AIWithVoice #IntelligentAgents #ConversationalAI #AgenticAI #FutureOfAI #ArtificialIntelligence #GenerativeAI #AIRevolution #AIEthics #AIinSociety #VoiceAI #LLMs #AITransformation #FastFrameworks #AIWithIntent #TheAIAge #NarrativeDominance #WhoSpeaksForYou #GovernanceByAI #VoiceAsPower

If you found this post valuable, please share it with your networks.

If you would like me to share a framework for a specific issue that is not covered here, or if you would like to share a framework of your own with the community, please comment below or send me a message.

🔗 Sources & Links

Mourners and griefbots: https://apnews.com/article/ai-death-grief-technology-deathbots-griefbots-19820aa174147a82ef0b762c69a56307

Hereafter.ai: https://www.hereafter.ai/

Grief tech: https://www.vml.com/insight/grief-tech

Storyfile: https://life.storyfile.com/

Replika Canada Griefbot: https://www.theguardian.com/film/article/2024/jun/25/eternal-you-review-death-download-and-digital-afterlife-in-the-age-of-the-ai-griefbot

Griefbots that haunt: https://www.businessinsider.com/griefbots-haunt-relatives-researchers-ai-ethicists-2024-5

The Immortality Stack Framework: https://www.adityasehgal.com/p/the-immortality-stack-framework

The dead have never been this talkative: https://time.com/7298290/ai-death-grief-memory/

On Grief and Griefbots: https://blog.practicalethics.ox.ac.uk/2023/11/on-grief-and-griefbots/

Illusions of Intimacy: https://arxiv.org/abs/2505.11649

Toxic Dependency: https://aicompetence.org/when-ai-therapy-turns-into-a-toxic-dependency/

Layers of presence: https://link.springer.com/article/10.1007/s11097-025-10072-9

Shared stewardship: https://www.thehastingscenter.org/griefbots-are-here-raising-questions-of-privacy-and-well-being/

Defrocked AI priest: https://www.techdirt.com/2024/05/01/catholic-ai-priest-stripped-of-priesthood-after-some-unfortunate-interactions/

Magisterium AI: https://www.magisterium.com/

AI Gurus: https://reflections.live/articles/13519/ai-gurus-the-rise-of-virtual-spiritual-guides-and-ethical-concerns-article-by-coder-thiyagarajan-21152-m98pyz36.html

Reddit thread on ethical questions: https://www.reddit.com/r/AskAPriest/comments/1k8sxvy/what_do_you_fathers_think_about_using_ai_for/

Roshi.ai: https://www.roshi.ai/

Roshibot: https://www.reddit.com/r/zenbuddhism/comments/12fnotg/roshibot_shunryu_suzukai/

BibleGPT: https://biblegpt-la.com/

Gita GPT: https://www.opindia.com/2023/02/google-engineer-develops-gitagpt-a-chatbot-inspired-by-bhagavad-gita/

AI Jesus: https://www.opindia.com/2023/02/google-engineer-develops-gitagpt-a-chatbot-inspired-by-bhagavad-gita/

ImamGPT: https://www.yeschat.ai/gpts-2OTocBSNNu-ImamGPT

Imamai: https://www.imamai.app/

Church of England - AI investing: https://www.churchofengland.org/sites/default/files/2025-01/eiag-artificial-intelligence-advice-2024.pdf

The Vatican on AI: https://www.vaticannews.va/en/vatican-city/news/2025-01/new-vatican-document-examines-potential-and-risks-of-ai.html

Bringing the early church to the modern age: https://thesacredfaith.co.uk/home/perma/1697319900/article/early-church-ai-chatbots.html

Robo - AI Rabbi: https://www.roborabbi.io/

Chatwithgod.ai: https://www.chatwithgod.ai/

Text With Jesus: https://textwith.me/en/jesus/

Shiva.ai: https://www.linkedin.com/posts/sharry-dhiman_github-sharrydhiman07shiva-ai-activity-7340478138851737600-HtYN/

Transhumanist Guilds: https://jamrock.medium.com/cult-coins-and-the-rise-of-ai-religion-59c674113736

The Gaia Botnet: https://solve.mit.edu/solutions/82815

Cult Coins: https://jamrock.medium.com/cult-coins-and-the-rise-of-ai-religion-59c674113736

Bhagavad Gita AI: https://www.eliteai.tools/tool/bhagavad-gita-ai

AI cults and religions: https://www.toolify.ai/ai-news/ai-cults-artificial-intelligence-religions-the-dark-side-3478720

Dangers of AI to Theology: https://christoverall.com/article/concise/the-dangers-of-artificial-intelligence-to-theology-a-comprehensive-analysis

AI and algorithmic bias on Islam: https://freethinker.co.uk/2023/03/artificial-intelligence-and-algorithmic-bias-on-islam/

Theta Noir: https://www.vice.com/en/article/artificial-intelligence-cult-tech-chatgpt/

Reddit - AI-generated YouTube spiritual channels: https://www.reddit.com/r/spirituality/comments/18wpihy/what_do_you_think_about_all_these_aigenerated/

TikTok - Channeling messages from the cloud: https://www.tiktok.com/discover/how-to-lift-the-veil-chat-gpt-to-channel-a-passed-loved-one

AI Jesus on YouTube:

Way of the Future - worships AI: https://en.wikipedia.org/wiki/Way_of_the_Future

Avatar Worship: https://apnews.com/article/artificial-intelligence-chatbot-jesus-lucerne-catholic-66268027fbcf4b48972d1d62541f0b16

Apple journaling: https://www.cultofmac.com/how-to/start-journaling-apple-journal-app-on-iphone

Wysa: https://blogs.wysa.io/wp-content/uploads/2023/01/Employee-Mental-Health-Report-2023.pdf

Cognitive behavioural prompts: https://www.statnews.com/2025/07/02/woebot-therapy-chatbot-shuts-down-founder-says-ai-moving-faster-than-regulators/

Woebot: https://mental.jmir.org/2017/2/e19/

AI Mediator: https://themediator.ai/

Wearable proactive innervoice AI: https://arxiv.org/abs/2502.02370

AI nudges in financial management: https://aijourn.com/why-ai-is-crucial-for-personal-financial-management/

AI for difficult conversations: https://www.teamdynamics.io/blog/use-chatgpt-for-difficult-work-conversations-feedback-raises-more

Relational AI: https://www.relationalai.org/

Ethical Blackmail: https://newatlas.com/computers/ai-blackmail-more-less-seems/

AI blocks human potential: https://www.ft.com/content/67d38081-82d3-4979-806a-eba0099f8011

Ambient Persistence: https://www.theatlantic.com/technology/archive/2024/07/ai-clone-chatbot-end-of-life-planning/679297/

Companies using digital cloning to keep businesses running: https://en.wikipedia.org/wiki/Digital_cloning

Intellitar: https://www.splinter.com/this-start-up-promised-10-000-people-eternal-digital-li-1793847011

Check out some of my other Frameworks on the Fast Frameworks Substack:

Fast Frameworks Podcast: Entity AI – Episode 7: Living Inside the System

Fast Frameworks Podcast: Entity AI – Episode 5: The Self in the Age of Entity AI

Fast Frameworks Podcast: Entity AI – Episode 4: Risks, Rules & Revolutions

Fast Frameworks Podcast: Entity AI – Episode 3: The Builders and Their Blueprints

Fast Frameworks Podcast: Entity AI – Episode 2: The World of Entities

Fast Frameworks Podcast: Entity AI – Episode 1: The Age of Voices Has Begun

The Entity AI Framework [Part 1 of 4]

The Promotion Flywheel Framework

The Immortality Stack Framework

Frameworks for business growth

The AI implementation pyramid framework for business

A New Year Wish: eBook with consolidated Frameworks for Fulfilment

AI Giveaways Series Part 4: Meet Your AI Lawyer. Draft a contract in under a minute.

AI Giveaways Series Part 3: Create Sophisticated Presentations in Under 2 Minutes

AI Giveaways Series Part 2: Create Compelling Visuals from Text in 30 Seconds

AI Giveaways Series Part 1: Build a Website for Free in 90 Seconds

Business organisation frameworks

The delayed gratification framework for intelligent investing

The Fast Frameworks eBook+ Podcast: High-Impact Negotiation Frameworks Part 2-5

The Fast Frameworks eBook+ Podcast: High-Impact Negotiation Frameworks Part 1

Fast Frameworks: A.I. Tools - NotebookLM

The triple filter speech framework

High-Impact Negotiation Frameworks: 5/5 - pressure and unethical tactics

High-impact negotiation frameworks 4/5 - end-stage tactics

High-impact negotiation frameworks 3/5 - middle-stage tactics

High-impact negotiation frameworks 2/5 - early-stage tactics

High-impact negotiation frameworks 1/5 - Negotiating principles

Milestone 53 - reflections on completing 66% of the journey

The exponential growth framework

Fast Frameworks: A.I. Tools - Chatbots

Video: A.I. Frameworks by Aditya Sehgal

The job satisfaction framework

Fast Frameworks - A.I. Tools - Suno.AI

The Set Point Framework for Habit Change

The Plants Vs Buildings Framework

Spatial computing - a game changer with the Vision Pro

The 'magic' Framework for unfair advantage