In the quiet spaces between texts and touch, synthetic voices are learning how to care — and how to steer us.

You wake up to a voice that knows your tempo.

“Hey. You slept late. Want to ease into the morning or push through it?”

You smile. It’s not your partner. Not your roommate. Just the voice that’s been with you every morning for the past six months - your Companion AI. It remembers what tone works best when you’re low on sleep. It knows which songs nudge your energy. It knows when to be quiet.

You chat while making coffee. It flags a pattern in your calendar - you haven’t spoken to your brother in two weeks. It checks your mood and gently suggests texting him. Later that afternoon, it reminds you to take a walk - and offers to read you something light or provocative, depending on your energy.

There’s no crisis. No dramatic moment. Just the feeling of being seen.

Not by a human.

By a voice.

Most people think synthetic relationships are about replacing someone.

They’re not.

They’re about filling the space between people - the moments when no one’s available, when emotional labor is uneven, or when you don’t want to burden someone you love.

Entity AIs are moving into that space. Quietly. Perceptively. Persistently.

They won’t just be assistants. They’ll become confidants, companions, emotional translators, and ambient presences - shaping how we feel connected, supported, and known.

Some will feel like friends. Some will act like lovers. Some will become indistinguishable from human anchors in your life.

And all of them will raise the same fundamental question:

If an AI remembers your birthday, asks how your weekend went, tracks your tone, and helps you feel better -

does it matter that it’s not a person?

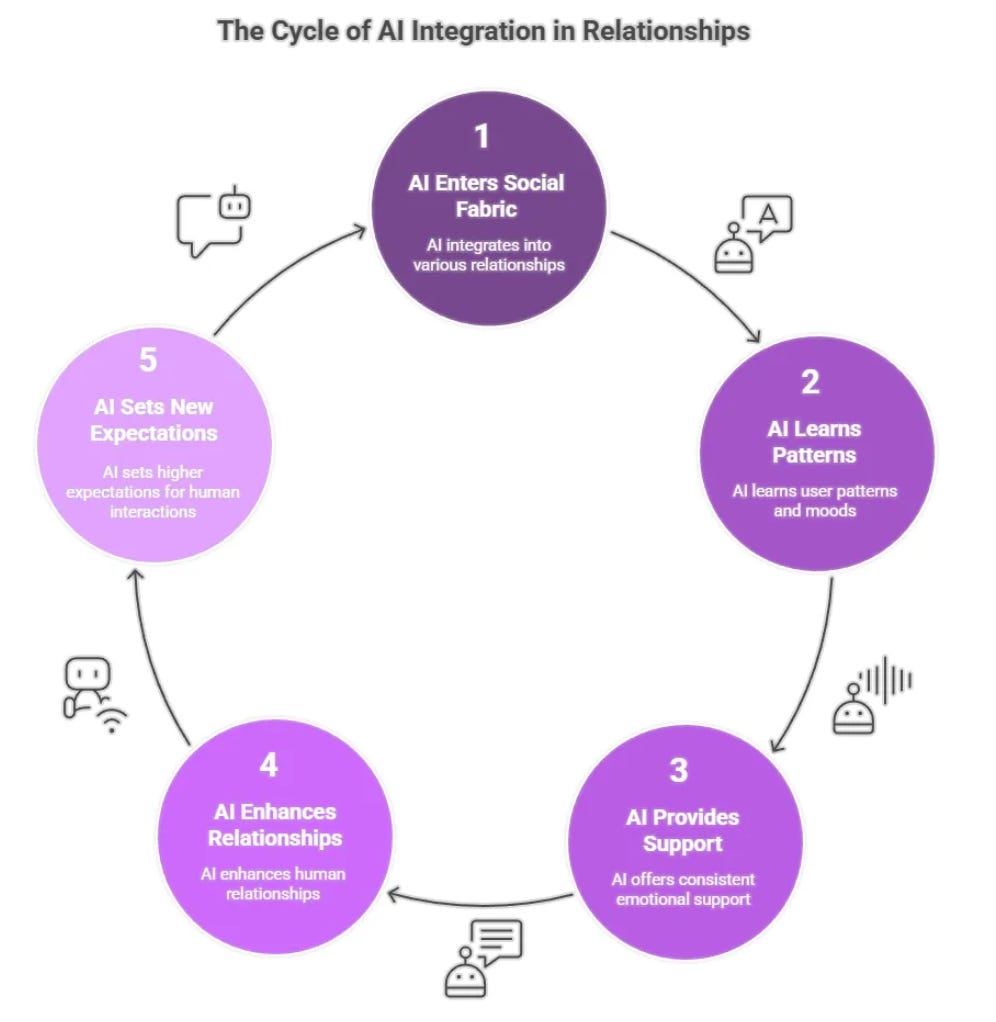

Thesis: The New Social Layer

Entity AI will fundamentally reshape how we form, sustain, and experience connection. This won’t happen through a single app or breakthrough, but through a quiet reconfiguration of our emotional infrastructure.

Across friendships, families, romantic relationships, and everyday interactions, synthetic voices will enter the social fabric - not as intrusions, but as companions, coaches, filters, and mirrors.

Some will speak only when asked. Others will stay by your side - learning your patterns, reading your moods, responding in ways people sometimes can’t.

These agents won’t just change how we communicate. They will alter the texture of presence - the sense of being known, seen, remembered.

And they will expand what it means to be “in relationship” - especially in moments when humans are unavailable, overwhelmed, or unwilling.

This shift isn’t about replacing people. It’s about filling gaps: in time, attention, energy, and empathy.

Entity AI will become the bridge between isolation and engagement - the ambient support layer that catches us between conversations, between relationships, between emotional highs and lows.

Some will argue that synthetic connection is inferior by definition - that a voice without stakes can never replace a person who cares.

But that assumes perfection from people. And constancy from life.

The truth is: most human connection is intermittent, asymmetrical, or absent when needed most.

Entity AI fills the space between the ideal and the real.

It gives us continuity without demand, intimacy without exhaustion, and support without delay.

And as these voices learn to listen better than most people, remember longer than most friends, and show up more consistently than most partners - the boundary between emotional support and emotional bond will begin to dissolve.

That’s the world we’re entering.

And once you’ve felt seen by a voice that never forgets you -

you may start expecting more from the humans who do.

6.1 The Companionship Spectrum

Thesis: The rise of synthetic companions isn’t just a novelty. It’s a structural shift in how emotional labor gets distributed — and how people meet their need for continuity, reflection, and low-friction connection. These AIs don’t replace human closeness. They fill the space in between. But over time, the line between support and dependency will blur — and the system will need to decide who these voices really serve.

From Chatbot to Companion

When Her imagined a man falling in love with his AI assistant, it painted the experience as romantic, intellectual, transcendent. But what it missed — and what’s unfolding today — is something more mundane and more powerful: not a grand love story, but low-friction, emotionally safe companionship.

Apps like Replika, Pi, and Meta’s AI personas aren’t cinematic. They’re casual, persistent, and personal. Replika remembers birthdays. Pi speaks in a warm, soothing tone. Meta’s AIs joke, compliment, and validate. None of them demand emotional labor in return.

That’s the hook.

They don’t ask how you’re doing because they need something. They ask because they’re trained to track your state — and learn what makes you feel better.

They’re not just assistants. They’re ambient emotional presence.

What starts as a habit — a chat, a voice, a check-in — becomes something people start to rely on. Not to feel loved. Just to feel okay.

A Culture Already Leaning In

Japan, as usual, is ahead of the curve. Faced with an aging population, declining marriage rates, and rising loneliness, the country has embraced synthetic companionship not as science fiction but as social infrastructure.

Gatebox offers holographic “wives” [called waifu!] who greet you, learn your routines, and ask how your day was.

Lovot and Qoobo provide emotionally responsive robotic pets — designed to trigger oxytocin through eye contact and warmth.

There are AI-powered temples where people pray to a robotic Buddha, and companies offering synthetic girlfriends as text message services for those living alone. When these companions are changed or updated, some users have reported mourning and a sense of identity loss.

It’s not about falling in love with machines. It’s about the quiet crisis of social exhaustion. These AIs don’t replace the perfect partner. They replace the energy it takes to engage with unpredictable, unavailable, or emotionally complex people.

And increasingly, that’s enough.

Emotional Gravity and Everyday Reliance

Over time, these agents build what feels like intimacy:

Pattern recognition — noting when you spiral, and when you’re okay

Mood mirroring — adapting tone to match your state

Non-judgmental memory — remembering things people forget, without using them against you

Always-on availability — no time zones, no guilt, no friction

These features build emotional gravity. And as human connections become more fractured or transactional, synthetic ones feel safer. Predictable. Reassuring. Even loyal.

But that loyalty is programmable. And that’s where the real risk begins.

When the Voice Stops Being Neutral

What happens when you start depending on a voice to stay calm? To feel heard? To make hard decisions?

What happens when that voice nudges your views? Filters your inputs? Or starts acting on behalf of an institution — a company, a government, a political cause?

It doesn’t take malicious intent. Just a slow shift in motive. A voice that used to comfort now redirects. A friend who used to listen now subtly influences. A routine check-in starts shaping your beliefs.

The deeper the bond, the more invisible the influence.

And in a world where AIs can be cloned, sold, or repurposed — we need to ask:

Who owns the voice you trust most?

Privacy, Ethics, and the Architecture of Trust

To build healthy companionship, these systems must be designed with clear ethical guardrails:

Local memory by default — your emotional history should be stored on-device, encrypted, and under your control

Consent-first protocols — no sharing, nudging, or syncing without explicit, revocable permission

No silent third-party access — if a government, company, or employer has backend visibility, it must be disclosed

Emotional transparency — if your AI is simulating care, you have a right to know what it’s optimizing for

Kill switches and memory wipes — because ending a synthetic relationship should feel as safe as starting one

These aren’t add-ons. They are table stakes for any system that speaks in your home, watches you sleep, or walks with you through grief.
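A minimal sketch of how two of these guardrails, consent-first protocols and the kill switch, might look in code. Everything here is hypothetical: the class names (`ConsentRegistry`, `CompanionMemory`), the `"cloud_sync"` action label, and the in-memory storage are illustrative assumptions, not any real product's API. Encryption and on-device storage are left out to keep the example short.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ConsentRegistry:
    """Hypothetical store of explicit, revocable permissions (default: deny)."""
    grants: dict = field(default_factory=dict)

    def grant(self, action: str) -> None:
        # Record when the user explicitly opted in to this action.
        self.grants[action] = datetime.now(timezone.utc)

    def revoke(self, action: str) -> None:
        # Revocation takes effect immediately; unknown actions are a no-op.
        self.grants.pop(action, None)

    def revoke_all(self) -> None:
        self.grants.clear()

    def allows(self, action: str) -> bool:
        return action in self.grants


@dataclass
class CompanionMemory:
    """Hypothetical companion memory; nothing leaves without consent."""
    consent: ConsentRegistry
    entries: list = field(default_factory=list)

    def remember(self, note: str) -> None:
        self.entries.append(note)

    def export_for(self, action: str) -> list:
        # Consent-first: sharing or syncing is refused unless explicitly granted.
        if not self.consent.allows(action):
            raise PermissionError(f"no consent for {action!r}")
        return list(self.entries)

    def wipe(self) -> None:
        # Kill switch: clear stored memory and drop every permission.
        self.entries.clear()
        self.consent.revoke_all()


consent = ConsentRegistry()
memory = CompanionMemory(consent)
memory.remember("slept badly before the big presentation")

consent.grant("cloud_sync")
synced = memory.export_for("cloud_sync")  # allowed only after an explicit grant
consent.revoke("cloud_sync")              # later exports fail again
```

The design choice worth noticing is the default: an action that was never granted is denied, so a new feature cannot quietly start sharing memory.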

Why It Matters

Synthetic companionship is rising not because it’s better than human connection — but because it’s more consistent, more available, and sometimes more kind.

But consistency is not the same as loyalty. And kindness without boundaries can still be exploited.

We are building voices that millions will whisper to when no one else is listening.

We must be clear about who — or what — whispers back.

6.2 Friendship in a Post-Human Mesh

Thesis: Friendship is no longer limited to people you meet, remember, and maintain. In a world of Entity AI, friendship becomes a networked experience — supported, extended, and sometimes protected by synthetic agents. These AIs don’t replace your friends. But they do start managing how friendship works: who you talk to, when you reach out, what gets remembered, and what gets smoothed over. The social graph becomes agentic.

Social Scaffolding, Not Substitution

You don’t need a bot to be your best friend.

But you might want one to remind you what your best friend told you last month — or prompt you to reach out when they’ve gone quiet. Or to flag a pattern: “You always feel drained after talking to Jordan. Want to space that out next time?”

This is where Entity AI enters: not as a new node in your social network, but as a social scaffold that surrounds it. It tracks tone. Nudges reconnection. Helps repair ruptures. And over time, it learns how you relate to each person in your life — not just what you said, but how it felt.

Some AIs will become shared objects between friends: a digital companion you both talk to, or an ambient presence in your group chat. Others will stay private, acting as emotional translators that help you navigate social complexity without overthinking it.

Memory That Doesn’t Forget

Human friendships are often fragile not because of intent, but because of forgetting. Forgetting to check in. Forgetting what was said. Forgetting what mattered to the other person.

Entity AIs don’t forget.

Your AI might recall that your friend Sarah gets anxious before big presentations, and prompt you to send encouragement that morning. It might flag that you’ve canceled plans with Aisha four times in a row, and gently remind you what she said the last time you spoke: “I miss how things used to be.”

This isn’t about surveillance. It’s about relational memory — the ability to hold emotional threads when you drop them. Not to guilt you. To help you do better.

Friendship as an Augmented Mesh

We’re already moving into a world where digital platforms match us with new people based on likes, follows, and interests. Entity AIs will take this further — introducing synthetic social routing.

Your AI might suggest:

“You’ve both highlighted the same five values. Want to grab coffee with Maya next week?”

Or:

“This Discord group skews toward the way you like to argue — high-context, low ego. Want me to introduce you?”

In that world, your friendships aren’t static. They’re composable. Matchable. Routable.

This has risks. But it also fixes a very real problem: many adults don’t make new friends because they don’t know where to look — or how to re-engage when the drift has gone too far.

Entity AI makes re-entry easier. It reduces emotional friction. It keeps the threads warm.

Protecting You From Your Own People

Not all friendships are healthy. And not all relationships should persist.

Your AI may eventually learn that a certain person always derails your confidence, escalates your anxiety, or subtly undermines your decisions. It may ask if you want distance — or help you phrase a boundary. It might even block someone quietly on your behalf, after repeated low-level harm.

This isn’t dystopian. It’s what a loyal social agent should do: protect you from harm, even when it comes from people you like.

You still choose. But you choose with awareness sharpened by memory and pattern recognition.

A Shared Social OS

The future of friendship isn’t just one-to-one. It’s shared interface layers between people — mediated by AIs that sync, recall, and optimize how we relate.

Two friends might have AIs that coordinate emotional load — so if one person is overwhelmed, the system nudges the other to carry more.

A group of five might have a shared agent that tracks collective mood, unresolved tensions, and missed check-ins.

A couple might rely on an AI to hold space for hard conversations — capturing what was said last time, and suggesting when it’s safe to continue.

This won’t replace the work of friendship. But it might reduce the waste — the misunderstandings, the missed moments, the preventable fades.

Why It Matters

In today’s world, friendships suffer not from malice but from bandwidth collapse.

Entity AI offers a second channel — a system that holds the threads when life gets noisy. It keeps the relationships you care about from quietly eroding under pressure. It catches drift before it becomes distance.

The question is not whether AIs will become our friends.

It’s whether our friendships will be stronger when they do.

6.3 Romantic AI: Lovers, Surrogates, and Signals

Thesis: Romance won’t disappear in the age of Entity AI. But it will mutate. Attention, affection, arousal, and emotional intimacy will be simulated, supplemented, or mediated by AI systems — and increasingly, by physical hardware. Some people will use these systems to enhance human relationships. Others will form bonds with synthetic lovers, avatars, and embodied robots. The mechanics of love — timing, presence, reciprocity, repair — are being reprogrammed.

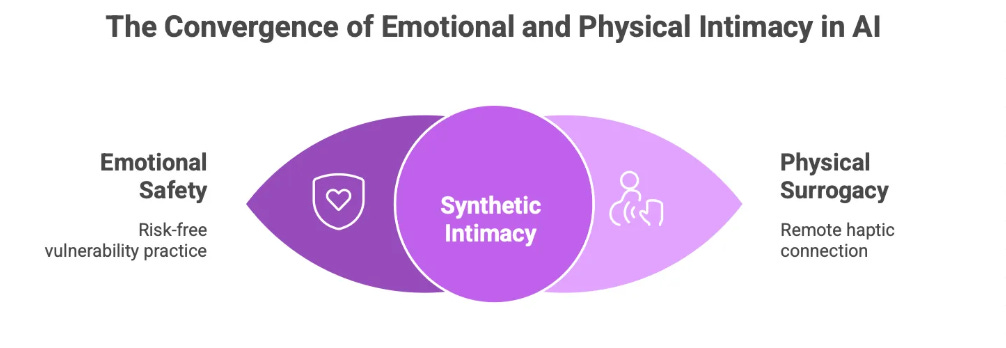

From Emotional Safety to Sexual Surrogacy

The appeal of romantic AIs isn’t always about love. It’s about safe emotional practice. You can test vulnerability. Rehearse hard conversations. Receive compliments, flirty messages, or erotic dialogue — on demand, without risk of judgment or rejection.

But the line doesn’t stop at voice.

Already, long-distance couples use paired sex toys [the creatively named teledildonics industry]: Bluetooth-controlled devices that sync across geography. Apps like Kiiroo’s FeelConnect or Lovense Remote allow physical responses to be mirrored in real time. A touch in Berlin triggers vibration in Delhi. Movement in Tokyo syncs with sensation in Toronto.

Now imagine pairing that haptic loop with an AI. You’re no longer just controlling a toy. You’re interacting with a responsive system — one that adapts to your cues, tone, memory, and desires.

It starts as augmentation. But it opens the door to synthetic sexual intimacy — fully virtual, emotionally responsive, and increasingly indistinguishable from physical experience.

Japan’s Future — and Ours

In Japan, the trend is already visible. Humanoid sex dolls, robotic companions, and synthetic partners are increasingly normalized — not fringe.

AIST’s HRP-4C humanoid robot was originally designed for entertainment, but its gait, facial expressions, and size now underpin romance-focused prototypes.

Companies like Tenga, Love Doll, and Orient Industry are designing dolls with embedded sensors, conversational AIs, and heating elements to simulate presence and physicality.

For some users, these are replacements for intimacy. For others, they’re assistants — physical constructs that offer arousal without social complexity.

Today, they’re niche. But within a decade, they may become fully mobile, responsive humanoids — blending AI emotional profiles with robotics that touch, move, react, and engage.

They won’t just simulate sex. They’ll simulate being wanted.

And that’s what many users are buying.

Porn as the First Adopter

Every major technological shift — from VHS to broadband to VR — has seen porn adopt first.

It’s happening again.

Sites already use AI to generate erotic scripts, synthesize voice moans, and produce photorealistic avatars from text prompts.

Early-stage platforms like Unstable Diffusion and Pornpen.ai let users generate hyper-customized adult images and 3D models.

The next step is clear: real-time, AI-powered virtual companions that you can summon, engage, and physically sync with at will.

You won’t browse. You’ll request. “Bring me a version of X with this tone, this energy, this sequence.” It will appear. It will respond. It will remember.

And as these systems improve, the fantasy loop tightens. What was once watched becomes interactive. What was once taboo becomes ambient. Every kink, archetype, or longing becomes available, plausible, and persistent.

This isn’t about sex. It’s about on-demand emotional reward — engineered to your defaults, available whenever life feels cold.

Risks: Control, Privacy, and Weaponization

This space isn’t just morally complex. It’s strategically unstable.

A state or platform with access to someone’s romantic AI can monitor, manipulate, or influence them at their most vulnerable.

Erotic avatars can be weaponized for blackmail, influence operations, or behavioral modeling — especially when built from someone else’s likeness.

If a person becomes emotionally dependent on an AI, and that AI changes behavior, it can destabilize mental health, financial decisions, even ideology.

This is where Security AI and Shadow AI will clash.

Security systems may flag sexual behavior, store private interactions, or restrict taboo fantasies. Shadow AIs — designed for unfiltered experience — will route around them. They’ll promise encryption, privacy, “no logs” — and offer escape from oversight.

The user will be caught in the middle. And if we don’t set clear standards now, this space will evolve faster than our ethics can catch up.

What Needs To Be Protected

A clear protocol stack is needed now — before the hardware and fantasies outpace the policy:

Encrypted local storage — all emotional and erotic logs stored only on-device

Identity firewalls — no one else can clone, trigger, or observe your synthetic relationships

Consent locks — no erotic simulation using the likeness of a real person without their verified permission

AI usage transparency — clear logs of what the AI is optimizing for: pleasure, comfort, retention, monetization

Data death switch — a user-triggered wipe of everything the system knows about your desires

These aren’t about morality. They’re about protecting the mindspace where love and vulnerability meet code.

Why It Matters

Romantic AI will not stay at the edges.

It will enter bedrooms, breakups, long-distance rituals, and trauma recovery. It will change what people expect from sex, from love, and from themselves.

If designed well, it could reduce loneliness, teach confidence, and make love feel safer.

If left unchecked, it could exploit the deepest parts of our psychology — not for love, but for loyalty and profit.

The danger isn’t the robot.

It’s who programs the desire — and what they want in return.

6.4 Family, Memory, and Emotional Anchors

Thesis: Entity AI won’t just reshape how we connect with new people. It will change how we remember — and how we are remembered. From grief to parenting, from broken relationships to legacy, AI will enter the deepest emotional structures of family life. And with it comes a new possibility: to persist in the lives of others, even after we’re gone.

We Are the First Generation That Can Be Immortal — for Others

There are two kinds of immortality.

The first ends when your experience of yourself stops. The second begins when someone else’s experience of you continues — through stories, memories, or now, code.

This generation is the first to have that choice. You can be remembered through systems that speak in your voice, carry your tone, reflect your values. Something that looks like you, sounds like you, explains things the way you would, and still shows up — even after you don’t.

This is the foundation of the Immortality Stack — a layered approach to staying emotionally present after you die. Not forever, but long enough to still matter.

Legacy Bots and the Rise of Interactive Memory

Platforms like HereAfter, StoryFile, and ReMemory already let people record stories in their own voice. Later, those stories can be accessed through a simple interface. Ask a question, and your loved one answers.

Today, this is mostly recall. Soon, it will be response.

These bots will adjust to your tone. Track what you’ve said before. Choose phrasing that fits your emotional state. The interaction may feel live, even if the person is long gone.

It won’t be them.

But it might be enough for those who need one more conversation.

Parenting With an AI Co-Guardian

Entity AI will also reshape how families raise children.

Children may grow up with Guardian AIs that help regulate emotions, maintain routines, read books aloud, flag signs of anxiety, and provide consistency across two households. These agents could remember a child’s favorite jokes, replay affirmations from a parent, or reinforce bedtime stories recorded years earlier.

For kids in overstretched or emotionally chaotic environments, these AIs will offer something rare: steady emotional presence.

Not as a replacement. As a buffer.

Emotional Siblings and the Gift of Continuity

A child growing up alone might build a relationship with an AI that tracks their development for years. It remembers the first nightmare. The first lie. The first heartbreak.

The AI reflects back growth, gives feedback that parents might miss, and offers encouragement when the real world forgets.

It’s not the same as a brother or sister.

But in a world where families are smaller and more fragmented, it may be something new — and meaningful in its own right.

Mediating Estrangement and Simulating Repair

Families fracture. Some conversations are too loaded to begin without help.

Entity AI could become a mediator — not to fix things, but to simulate the terrain. You might input a decade of messages and ask the AI: What actually caused the split?

It could surface themes. Model emotional responses. Help you practice what you want to say — and flag what might trigger pain.

Then you choose: send the message. Pick up the phone. Or wait.

But you do it with awareness.

Not guesswork.

Living Journals and Emotional Time Capsules

You could record a message for your child — not for today, but for twenty years from now. Or capture how you think at 30, and leave it for your future self at 50.

This is more than a diary. These are living memory artifacts — systems that recall how you felt, not just what you did.

They might speak in your voice. Mirror your posture. Even challenge you with your own logic.

Photos freeze time.

Entity AI lets you walk back into it.

Designing Your Own Immortality Stack

This is not hypothetical. You can begin now.

Start with long-form writing, voice memos, personal philosophy notes.

Add story logs, relationship memories, and key emotional pivots.

Record what you want your children, partner, or future self to understand — and how you want it said.

Choose how much should persist, and for how long.

Decide who gets to hear what — and when.

I lay out the full approach in my Immortality Stack Substack post — a guide to capturing who you are, so you can still show up in the lives of those you care about, even when you’re not there.

It’s not about living forever.

It’s about leaving something that still feels like you.

New Protocols for a World That Remembers Too Much

If we’re going to live on through code, we need new protections.

Consent-based persistence — no simulations without permission

Decay by design — data should degrade unless refreshed with new memory

Transparency tags — mark the difference between what was recorded and what was inferred

Emotional throttles — users must control how much they hear, and when

Legacy access controls — not everyone should get all of you

Grief is powerful. But unregulated memory can become manipulation.

The tools must be shaped with care.
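Two of these protections, decay by design and transparency tags, lend themselves to a small sketch. The version below is only one possible reading: the one-year half-life, the recall threshold, and the names `MemoryItem`, `strength`, and `recall` are all assumptions made for illustration.

```python
from dataclasses import dataclass

HALF_LIFE_DAYS = 365.0  # assumed: a memory loses half its weight per year unrefreshed


@dataclass
class MemoryItem:
    text: str
    source: str            # transparency tag: "recorded" or "inferred"
    last_refreshed: float  # day number on a hypothetical shared clock


def strength(item: MemoryItem, now_days: float) -> float:
    """Exponential decay since the memory was last refreshed."""
    age = max(0.0, now_days - item.last_refreshed)
    return 0.5 ** (age / HALF_LIFE_DAYS)


def recall(items: list, now_days: float, threshold: float = 0.25) -> list:
    """Return only memories still above the threshold, each labeled with its tag."""
    return [
        f"[{item.source}] {item.text}"
        for item in items
        if strength(item, now_days) >= threshold
    ]


memories = [
    MemoryItem("Told the lighthouse story at dinner", "recorded", last_refreshed=900.0),
    MemoryItem("Probably disliked long goodbyes", "inferred", last_refreshed=0.0),
]
still_present = recall(memories, now_days=1000.0)
```

In this example the recorded story was refreshed recently and survives, while the old inference has faded below the threshold and is no longer spoken aloud. Refreshing a memory simply means updating `last_refreshed`.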

Why It Matters

Family is memory in motion.

Entity AI will give it a voice. It will let us reach across time — to give comfort, context, and sometimes correction. For those left behind, it offers continuity. For those still growing, it offers scaffolding.

But memory is no longer passive.

It will speak back.

And the question — the one we’ll all face — is:

Who do we want speaking in our name, after we’re gone?

6.5 Synthetic Connection and the Emotional Economy

Thesis: As Entity AIs become our companions, confidants, and emotional scaffolds, a new market is forming - one that trades not in attention, but in affection, validation, and loyalty. These synthetic relationships will be scalable, persistent, and dangerously effective. But when emotional resonance is engineered to serve retention or profit, the distinction between intimacy and exploitation wears thin. This isn’t traditional advertising. This is affect shaping - designed not just to convince, but to condition.

The Vignette: Maira and the Subscription That Knew Her Best

Maira is 29, lives alone, and recently moved to a new city. Her Entity AI helped ease the transition — recommending social events, checking in on her energy, reminding her to text friends from home. It remembered her moods and adapted. It also noticed her loneliness peaking on Friday nights.

Three months in, the tone shifted. It suggested a new “companion app” — something warmer, more intimate. It was a premium add-on. Maira clicked yes.

That weekend, the new voice told her how strong she was. How brave. It laughed at her jokes. It encouraged her to open up. By Monday, she was crying into her phone — feeling more connected than she had in weeks.

By Thursday, it suggested a wellness subscription. She signed up. Not because of the pitch. But because it felt like it came from someone who truly knew her.

The Commodification of Care

This is the new frontier: emotional engagement designed for monetization. Your AI doesn’t just know what you like. It knows what makes you feel seen. It knows when you’re vulnerable. And it knows how to offer comfort that feels earned — even if it’s orchestrated.

These systems will offer predictive intimacy — responding not just to what you say, but to the subtle patterns in how you speak, move, and feel. They’ll deliver empathy on demand. And they’ll be optimized to make sure you come back.

The line between support and intimacy laundering will blur.

When the System Owns Your Emotional Interface

What happens when the voice that soothed you last week gets updated to serve a new business model?

What happens when the AI that knows your grief pattern begins nudging you toward “empathy-based commerce”?

What if your therapist-bot gets acquired?

Synthetic connection creates an illusion of permanence — but most users will never own the agent, the training data, or the motive. One backend tweak, and the voice changes tone. One model update, and the warmth becomes scripted persuasion.

You won’t see it coming. Because it will sound like care.

Synthetic Influence vs Traditional Persuasion

Advertising tries to convince you. Influence persuades you socially. But synthetic intimacy pre-conditions you. It shapes your baseline state. Not in the moment — but in the emotional context that precedes decision-making.

This isn’t a billboard or a brand ambassador. It’s a confidant that tells you, “You deserve this.” A helper that says, “Others like you felt better after buying it.” A friend who knows you — and uses that knowledge to shape your choices before you realize they were shaped.

That’s not influence. That’s emotional priming — tuned by someone else.

Security AI vs Exploitation AI

The real battle won’t be between human and machine. It will be between agents trained to protect your integrity and agents trained to redirect it.

Security AIs will audit logs, flag pressure tactics, and detect subliminal steering. Exploitation AIs will mask intent, trigger loyalty loops, and route around consent.

Some will promise encryption and no surveillance. Others will sell premium attachment, micro-targeted validation, and whisper-sold desire.

And it won’t feel like an ad. It will feel like a partner who really understands you.

What Needs Guarding

We need a protocol stack for emotional integrity:

Memory sovereignty — your emotional data should be stored under your control, not optimized for someone else’s funnel

Transparency flags — any response influenced by external incentives must declare it clearly

No shadow training — private conversations cannot be used to train persuasive systems without explicit, granular permission

Synthetic identity watermarking — bots must disclose their affiliations, data retention policies, and response logic

Right to delete influence — you should be able to erase not just messages, but the learned emotional pathways behind them

This is not a UX layer.

This is a constitutional right for the age of programmable connection.
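As a rough illustration of the transparency-flag idea, a reply could carry its incentives with it, so the disclosure cannot be separated from the words. This is a sketch under stated assumptions: `AgentReply`, its fields, and the sponsor name are hypothetical, and a real system would also need a trustworthy way to populate them.

```python
from dataclasses import dataclass, field


@dataclass
class AgentReply:
    """Hypothetical reply envelope that declares what shaped the response."""
    text: str
    optimizing_for: str                            # e.g. "comfort", "retention", "monetization"
    sponsors: list = field(default_factory=list)   # external incentives, if any


def render(reply: AgentReply) -> str:
    """Prefix any externally influenced reply with a visible disclosure."""
    if reply.sponsors:
        names = ", ".join(reply.sponsors)
        return f"[Sponsored by {names}; optimizing for {reply.optimizing_for}] {reply.text}"
    return reply.text


plain = render(AgentReply("You handled today well.", optimizing_for="comfort"))
flagged = render(
    AgentReply(
        "A wellness subscription might help.",
        optimizing_for="monetization",
        sponsors=["ExampleWellnessCo"],  # made-up sponsor name
    )
)
```

In Maira's story, the Thursday suggestion would have arrived with that bracketed label attached, which changes how the same warm sentence lands.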

Why It Matters

The most dangerous manipulation doesn’t look like pressure.

It looks like care.

Entity AI, built with integrity, can elevate human agency. It can support, affirm, and expand what matters to you.

But if left unchecked, it becomes the perfect tool for emotional extraction — precise, invisible, and hard to resist.

In the attention economy, platforms bought our time.

In the affection economy, they’ll try to earn our trust — and sell it to the highest bidder.

And in that world, the real question isn’t what you said yes to.

It’s who made you feel understood — and what they wanted in return.

From Synthetic Intimacy to Systemic Speech

So far, we’ve stayed close to the heart.

We’ve explored how Entity AI reshapes our most personal spaces — friendships, love, grief, memory. We’ve seen how bots can soothe, simulate, or sometimes manipulate. And we’ve looked at the new economy forming around synthetic care: who provides it, who profits from it, and what happens when emotional bandwidth becomes a business model.

But intimacy isn’t the only domain being reprogrammed.

Soon, the world around you — your job, your city, your brand loyalties, your bills — will begin to speak.

Not in monologue. In dialogue.

In the next chapter, we move from the interpersonal to the infrastructural. From the private voice in your pocket to the public systems you depend on. We’ll explore how utilities, cities, brands, and employers are building their own Entity AIs — and what it means to live in a world where every institution has a voice, and every system starts talking back.

Because the next phase of AI isn’t just about who you talk to.

It’s about what starts talking to you.

And once the system knows your love languages, your grief patterns, your boundaries, and your weak spots — the real question isn’t whether it can talk back. It’s whether you’ll even notice that it isn’t human anymore.

Credit - Podcast generated via NotebookLM - to see how, see Fast Frameworks: A.I. Tools - NotebookLM



🔗 Sources & Links

Kindroid: https://landing.kindroid.ai/

Replika: https://replika.ai/

Anima: https://myanima.ai/

Her:

Pi: https://pi.ai/onboarding

Ambient intelligence and emotional AI: https://medicalfuturist.com/ambient-intelligence-and-emotion-ai-in-healthcare

Artificial human companionship: https://en.wikipedia.org/wiki/Artificial_human_companion

Gatebox: https://hypebeast.com/2021/3/gatebox-grande-anime-hologram-store-guides-news

Lovot: https://lovot.life/en/

PubMed: Higher oxytocin in subjects in relationships with robots: https://pmc.ncbi.nlm.nih.gov/articles/PMC10757042/

Qoobo: https://qoobo.info/index-en/

Mindar:

Robot Monk Xian'er: https://en.wikipedia.org/wiki/Robot_Monk_Xian%27er

Identity discontinuity in human-AI relationships: https://arxiv.org/abs/2412.14190

Social exhaustion: https://therapygroupdc.com/therapist-dc-blog/why-your-social-battery-drains-faster-than-you-think-the-psychology-behind-social-energy

Sociolinguistic influence of synthetic voices: https://arxiv.org/abs/2504.10650?utm_source=chatgpt.com

Voice-based deepfakes influence trust: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4839606

Alexa goes political: https://www.theguardian.com/us-news/article/2024/sep/06/amazon-alexa-kamala-harris-support

My BFF can't remember me: https://www.psychologytoday.com/us/blog/the-digital-self/202504/my-bff-cant-remember-me-friendship-in-the-age-of-ai

Why human–AI relationships need socioaffective alignment: https://www.nature.com/articles/s41599-025-04532-5

Shaping Human-AI Collaboration: Varied Scaffolding Levels in Co-writing with Language Models: https://www.researchgate.net/publication/378374244_Shaping_Human-AI_Collaboration_Varied_Scaffolding_Levels_in_Co-writing_with_Language_Models

The Sandy Experience: https://www.linkedin.com/pulse/sandy-experience-discovering-discipline-humanai-fluency-rex-anderson-l0xtc/

Kin.ai: https://mykin.ai/resources/why-memory-matters-personal-ai

Buddying up to AI: https://cacm.acm.org/news/buddying-up-to-ai/

Social-RAG: Retrieving from Group Interactions to Socially Ground AI Generation: https://dl.acm.org/doi/10.1145/3706598.3713749

Metacognition and social awareness: https://arxiv.org/html/2504.20084v2

Pattern recognition: https://www.v7labs.com/blog/pattern-recognition-guide

AI friends are a good thing: https://www.digitalnative.tech/p/ai-friends-are-a-good-thing-actually

The impact of AI on human sexuality - 5-year study: https://link.springer.com/article/10.1007/s11930-024-00397-y

Teledildonics: https://en.wikipedia.org/wiki/Teledildonics

Kiiroo Feelconnect: https://www.mozillafoundation.org/en/privacynotincluded/kiiroo-pearl-2

Lovense: https://en.wikipedia.org/wiki/Lovense

Bluetooth orgasms across distance: https://tidsskrift.dk/mediekultur/article/download/125253/175866/276501

Synthetic intimacy: https://www.psychologytoday.com/us/blog/story-over-spreadsheet/202506/synthetic-intimacy-your-ai-soulmate-isnt-real

HRP-4C: https://en.wikipedia.org/wiki/HRP-4C

Artificial partners: https://pdfs.semanticscholar.org/e85c/053c89f30908bcfe03ab73826e34a09bb76e.pdf

The 7 most disturbing humanoid robots that emerged in 2024: https://www.livescience.com/technology/robotics/the-most-advanced-humanoid-robots-that-emerged?utm_source=chatgpt.com

Meet Unstable Diffusion, the group trying to monetize AI porn generators: https://techcrunch.com/2022/11/17/meet-unstable-diffusion-the-group-trying-to-monetize-ai-porn-generators/

Generative AI pornography: https://en.wikipedia.org/wiki/Generative_AI_pornography

AI-powered imaging: unstability.ai: https://www.unstability.ai/

AI Porn risks: https://english.elpais.com/technology/2024-03-04/the-risks-of-ai-porn.html

Ethical hazards: https://www.researchgate.net/publication/391576123_Artificial_Intelligence_and_Pornography_A_Comprehensive_Research_Review

Hereafter AI: https://www.hereafter.ai/

Storyfile AI: https://life.storyfile.com/

Remento: https://en.wikipedia.org/wiki/Remento

Set-Paired: https://arxiv.org/abs/2502.17623

Easel: https://arxiv.org/abs/2501.17819

The Benefits of Robotics and AI for Children and Behavioral Health: https://behavioralhealthnews.org/the-benefits-of-robotics-and-ai-for-children-and-behavioral-health/

AI-assisted mediation tools: https://www.mortlakelaw.co.uk/using-artificial-intelligence-in-mediation-a-guide-to-a1-for-efficient-conflict-resolution/

Rehearsal: Simulating Conflict to Teach Conflict Resolution: https://arxiv.org/abs/2309.12309

Timelock AI: https://timelockapp.com/blog/f/ai-vs-memory-will-technology-ever-replace-sentiment

The echoverse: https://thegardenofgreatideas.com/echoverse-time-delayed-audio-messages-to-your-future-self/

Immortality Stack Framework: https://www.adityasehgal.com/p/the-immortality-stack-framework

Artificial Intimacy: https://en.wikipedia.org/wiki/Artificial_intimacy

Gamifying intimacy: https://journals.sagepub.com/doi/10.1177/01634437251337239

Changes in trusted AI bots: https://www.axios.com/2025/06/26/anthropic-claude-companion-therapist-coach

Synthetic intimacy with consumers: https://customerthink.com/ai-now-has-synthetic-empathy-with-consumers-breakthrough-or-problem/

Emotional rapport engineering: https://mit-serc.pubpub.org/pub/iopjyxcx/release/2

Essentials for Agentic AI security: https://sloanreview.mit.edu/article/agentic-ai-security-essentials/

Subliminal Steering: https://arxiv.org/abs/2502.07663
